This post is a continuation of the previous post, where we set up our ZCU106 board for Ubuntu 20.04 LTS. In this post we’re going to build the hardware platform that is built into the Certified Ubuntu 20.04 LTS images for the ZCU106 board. The reason we would want to be able to do this is so that we can customize the hardware platform: perhaps add functionality, add external connections, or add accelerator IP. In this post we won’t actually modify the design; we’ll just build it and then verify that it works on the hardware. In a later post we will modify the platform, and my objective at that time will be to add support for the RPi Camera FMC.

Although you don’t need to have done the previous post in order to build the hardware platform here, you will need to have done it if you want to test the hardware.

Hardware platform for ZCU106

The hardware platform for ZCU106 that is built into the Ubuntu 20.04 LTS release is the VCU HDMI ROI Targeted Reference Design (TRD) for ZCU106. Follow that link for a detailed description of the hardware platform, including how it works, how to build it and how to use it. There is also a similar TRD for the ZCU104 board that is built into Ubuntu 20.04 LTS for that board, and you can follow these instructions to build that design too.

Hardware requirements

Here’s what you will need in terms of hardware:

- Linux PC (Red Hat, CentOS or Ubuntu)

- ZCU106 Zynq UltraScale+ Development Board

- DisplayPort monitor and cable

- USB Webcam

- USB keyboard and mouse

- USB hub (since the ZCU106 has only one USB port)

- Ethernet cable to a network router (for network and internet access)

Software requirements

We’re going to build this platform using a PC running Ubuntu 18.04 LTS, but you can use a different version of Ubuntu, or even Red Hat or CentOS, as long as it is one of the supported OSes of PetaLinux 2020.2. Here is the main software that you will need to have installed on your Linux machine:

Vitis and PetaLinux 2020.2

If you need some guidance on installing Vitis and PetaLinux 2020.2, I’ve written a guide to installing the older version 2020.1 here. In that post we installed the tools onto a virtual machine, but for this post I highly recommend using a dedicated machine if you can, because it’s quite a big project and the build time can be extremely long on a VM.

Y2K22 bug patch

You will run into build issues if you don’t install the Y2K22 bug patch. Follow that link, download the y2k22_patch-1.2.zip file from the attachments at the bottom of the page, extract it to the location where the Xilinx tools were installed (in my case ~/Xilinx/) and then run it:

cd ~/Xilinx

unzip ~/Downloads/y2k22_patch-1.2.zip -d .

export LD_LIBRARY_PATH=$PWD/Vivado/2020.2/tps/lnx64/python-3.8.3/lib/

Vivado/2020.2/tps/lnx64/python-3.8.3/bin/python3 y2k22_patch/patch.py

That assumes that your Xilinx tools were installed in the ~/Xilinx directory and that the patch file was downloaded to the ~/Downloads/ directory.
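If you would like the patch step to fail early with a clear message (for example when the zip is not where you expect it), the same three commands can be wrapped in a small function. This is a minimal sketch under the same assumptions as above (tools in ~/Xilinx, zip in ~/Downloads/); the function name apply_y2k22_patch is my own, and unzip’s -o flag is added only so that a re-run doesn’t stop to ask about overwriting files.

```shell
#!/bin/sh
# Hypothetical wrapper around the three patch commands above, with checks.
# $1: Xilinx install directory (default ~/Xilinx)
# $2: downloaded patch zip (default ~/Downloads/y2k22_patch-1.2.zip)
apply_y2k22_patch() {
    xilinx_dir="${1:-$HOME/Xilinx}"
    zip_file="${2:-$HOME/Downloads/y2k22_patch-1.2.zip}"
    py="Vivado/2020.2/tps/lnx64/python-3.8.3"
    # Make the zip path absolute, because we cd into the install dir below.
    case "$zip_file" in
        /*) ;;
        *) zip_file="$PWD/$zip_file" ;;
    esac
    [ -f "$zip_file" ] || { echo "Patch zip not found: $zip_file" >&2; return 1; }
    [ -x "$xilinx_dir/$py/bin/python3" ] || { echo "Bundled Python not found in $xilinx_dir/$py" >&2; return 1; }
    # Subshell so the caller's directory and LD_LIBRARY_PATH are left untouched.
    (
        cd "$xilinx_dir" &&
        unzip -o "$zip_file" -d . &&
        LD_LIBRARY_PATH="$PWD/$py/lib/" "$py/bin/python3" y2k22_patch/patch.py
    )
}
```

Usage, with the default locations: apply_y2k22_patch, or apply_y2k22_patch /path/to/Xilinx /path/to/y2k22_patch-1.2.zip otherwise.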

XRT 2020.2

Download the XRT 2020.2 rpm/deb file that is appropriate for your OS, using the following links:

Then you need to install it by running the installer. In Ubuntu this is done with the following command (assuming the file has gone into the ~/Downloads/ directory):

sudo apt install ~/Downloads/xrt_<version>-xrt.deb

For other OSes, please refer to the XRT installation guide. It should install to /opt/xilinx/xrt.

HDMI IP license

To build these designs we need to get a license for the HDMI Transmitter and Receiver Subsystem IPs. We can generate a single evaluation license that covers both of those IP by using the following link: LogiCORE, HDMI, Evaluation License.

Create a node locked license and copy the license file to the ~/.Xilinx directory.

Assumptions

Let me just make clear some assumptions so that you can adjust my commands to suit your own situation.

- I’ll assume that when you download files, they end up in ~/Downloads/.

- I’ll assume that you have chosen these locations for your installs:

| Software | Install location |
|---|---|
| Vitis 2020.2 | ~/Xilinx/ |
| PetaLinux 2020.2 | ~/petalinux/2020.2/ |
| XRT 2020.2 | /opt/xilinx/xrt/ |
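If your locations differ from mine, one option is to capture them in shell variables once and check them before starting a long build. This is a sketch of my own, not part of any Xilinx tooling: XILINX_DIR, PETALINUX_DIR, XRT_DIR and LICENSE_DIR are hypothetical names whose defaults are simply the locations above, and the license check assumes the HDMI license file has the usual .lic extension.

```shell
#!/bin/sh
# Hypothetical variables for the install locations assumed above; set any of
# them before sourcing this snippet if your own locations differ.
XILINX_DIR="${XILINX_DIR:-$HOME/Xilinx}"
PETALINUX_DIR="${PETALINUX_DIR:-$HOME/petalinux/2020.2}"
XRT_DIR="${XRT_DIR:-/opt/xilinx/xrt}"
LICENSE_DIR="${LICENSE_DIR:-$HOME/.Xilinx}"

# check_installs: report each settings script used in this post that cannot
# be found, and a missing .lic file; return non-zero if anything is missing.
check_installs() {
    missing=0
    for f in "$XILINX_DIR/Vivado/2020.2/settings64.sh" \
             "$XILINX_DIR/Vitis/2020.2/settings64.sh" \
             "$PETALINUX_DIR/settings.sh" \
             "$XRT_DIR/setup.sh"; do
        [ -f "$f" ] || { echo "Not found: $f" >&2; missing=1; }
    done
    # Look for at least one license file (e.g. the HDMI evaluation license).
    found_lic=0
    for lic in "$LICENSE_DIR"/*.lic; do
        [ -f "$lic" ] && found_lic=1
    done
    [ "$found_lic" -eq 1 ] || { echo "No .lic file in $LICENSE_DIR" >&2; missing=1; }
    return "$missing"
}
```

Running check_installs && echo "All set" before the Vivado step gives a quick yes/no; it only checks that the files exist, not that the tools are working.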

Build Instructions

Now let’s get to actually building the hardware platform.

zcu106 references to zcu104. You will also want to pay attention to any of these notes along the way.

Download sources

- Create a directory to work in and an environment variable to hold the path of our working directory. Change the actual path to one that you prefer; I’m using ~/zcu106_vcu_hdmi_roi.

cd ~

mkdir zcu106_vcu_hdmi_roi

export WORK=~/zcu106_vcu_hdmi_roi

- Download the source code package from the following link:

ZCU104 note: Here’s the link for the VCU HDMI ROI TRD package for ZCU104 for those who are using that board instead.

- Extract the file contents to $WORK:

unzip ~/Downloads/rdf0617-zcu106_vcu_hdmi_roi_2020_2.zip -d $WORK/.

cd $WORK/rdf0617-zcu106_vcu_hdmi_roi_2020_2

ZCU104 note: If you’re using the ZCU104, you’ll need to replace the TRD name above with rdf0428-zcu104-vcu-hdmi-roi-2020-2.
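Since the ZCU104 notes mostly come down to swapping the TRD name, one option is to derive it from a single variable so that later commands read the same for both boards. This is a hypothetical helper of my own (BOARD and trd_name are not part of the TRD); the two package names are the ones given above, and the default WORK path is the one I’m using.

```shell
#!/bin/sh
# Hypothetical helper: map a board name to the TRD package name used above.
trd_name() {
    case "$1" in
        zcu106) echo "rdf0617-zcu106_vcu_hdmi_roi_2020_2" ;;
        zcu104) echo "rdf0428-zcu104-vcu-hdmi-roi-2020-2" ;;
        *) echo "Unknown board: $1" >&2; return 1 ;;
    esac
}

# Defaults match this post; set BOARD (and WORK) before sourcing to override.
BOARD="${BOARD:-zcu106}"
WORK="${WORK:-$HOME/zcu106_vcu_hdmi_roi}"
TRD_HOME="$WORK/$(trd_name "$BOARD")"
export WORK TRD_HOME
```

Assuming the ZCU104 zip follows the same naming, the extraction step could then be written as unzip ~/Downloads/$(trd_name "$BOARD").zip -d $WORK/. for either board. This only covers the TRD name; other zcu106-specific names (such as the PetaLinux BSP) presumably still need changing by hand for the ZCU104.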

Vivado build

- Source the settings script for Vivado:

source ~/Xilinx/Vivado/2020.2/settings64.sh

- Create an environment variable to hold the path of the TRD:

export TRD_HOME=$WORK/rdf0617-zcu106_vcu_hdmi_roi_2020_2

ZCU104 note: If you’re using the ZCU104, use this instead:

export TRD_HOME=$WORK/rdf0428-zcu104-vcu-hdmi-roi-2020-2

- Run the script to build the Vivado project and generate the bitstream and XSA. This will open the Vivado GUI, construct the block diagram and then generate the bitstream and XSA. When it has finished, you can close down the Vivado GUI.

cd $TRD_HOME/pl

vivado -source ./designs/zcu106_ROI_HDMI/project.tcl

The main output product of the Vivado build is the .xsa file which will be needed by the PetaLinux tools and Vitis in the following steps.
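Before moving on, it can be worth confirming that the XSA really ended up where the PetaLinux and Vitis steps expect it. A minimal sketch; check_xsa is my own name, and the path is the one used in those steps. It tests with -e rather than -f so that it passes whether that path turns out to be a file or a directory.

```shell
#!/bin/sh
# Hypothetical check: confirm the Vivado build produced the XSA at the path
# used by the petalinux-config and Vitis steps in this post.
# $1: the TRD directory (normally $TRD_HOME)
check_xsa() {
    xsa="$1/pl/build/zcu106_ROI_HDMI/zcu106_ROI_HDMI.xsa"
    if [ -e "$xsa" ]; then
        echo "Found XSA: $xsa"
    else
        echo "XSA not found at $xsa; check the Vivado build output" >&2
        return 1
    fi
}
```

Usage: check_xsa "$TRD_HOME".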

PetaLinux build

- Source the settings script for PetaLinux:

source ~/petalinux/2020.2/settings.sh

- Create the PetaLinux project:

cd $TRD_HOME/apu/vcu_petalinux_bsp/

petalinux-create -t project -s xilinx-vcu-roi-zcu106-v2020.2-final.bsp

- Configure the PetaLinux project and then build it:

cd xilinx-vcu-roi-zcu106-v2020.2-final/

petalinux-config --silentconfig --get-hw-description=$TRD_HOME/pl/build/zcu106_ROI_HDMI/zcu106_ROI_HDMI.xsa/

petalinux-build

- When the build is complete, create a text file called linux.bif:

cd $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux

gedit linux.bif

Copy the following source and paste it into the empty linux.bif file, then save the file:

/* linux */
the_ROM_image:
{
[bootloader, destination_cpu = a53-0] <zynqmp_fsbl.elf>
[pmufw_image] <pmufw.elf>
[destination_device=pl] <bitstream>
[destination_cpu=a53-0, exception_level=el-3, trustzone] <bl31.elf>
[destination_cpu=a53-0, exception_level=el-2] <u-boot.elf>
}

- Now we’re going to create directories called boot and image and copy some of the build products into those directories:

mkdir boot

mkdir image

cp linux.bif boot/.

cp bl31.elf pmufw.elf u-boot.elf zynqmp_fsbl.elf boot/.

cp boot.scr Image system.dtb image/.
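If you’d rather not paste through gedit, or want the copy step to complain about missing files instead of carrying on, both of the last two steps can be scripted. A sketch of my own: write_linux_bif and stage_boot_files are hypothetical names, both take the PetaLinux images/linux directory as their argument (defaulting to the current directory), and the BIF content is exactly the one above.

```shell
#!/bin/sh
# write_linux_bif: write linux.bif with the same content as above, no editor.
write_linux_bif() {
    target="${1:-.}"
    cat > "$target/linux.bif" <<'EOF'
/* linux */
the_ROM_image:
{
    [bootloader, destination_cpu = a53-0] <zynqmp_fsbl.elf>
    [pmufw_image] <pmufw.elf>
    [destination_device=pl] <bitstream>
    [destination_cpu=a53-0, exception_level=el-3, trustzone] <bl31.elf>
    [destination_cpu=a53-0, exception_level=el-2] <u-boot.elf>
}
EOF
}

# stage_boot_files: copy the build products into boot/ and image/ and report
# (rather than silently skip) any file that is missing.
stage_boot_files() {
    src="${1:-.}"
    status=0
    mkdir -p "$src/boot" "$src/image" || return 1
    for f in linux.bif bl31.elf pmufw.elf u-boot.elf zynqmp_fsbl.elf; do
        cp "$src/$f" "$src/boot/" 2>/dev/null || { echo "Missing: $f" >&2; status=1; }
    done
    for f in boot.scr Image system.dtb; do
        cp "$src/$f" "$src/image/" 2>/dev/null || { echo "Missing: $f" >&2; status=1; }
    done
    return "$status"
}
```

From the images/linux directory, write_linux_bif && stage_boot_files replaces the gedit, mkdir and cp commands.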

Vitis build

- Create a directory that we will use as the Vitis workspace:

mkdir $TRD_HOME/vitis_ws

- Source the settings script for Vitis, then launch the Vitis IDE:

source ~/Xilinx/Vitis/2020.2/settings64.sh

vitis &

- Select the directory we just created as the workspace.

- Create a new Platform Project by selecting File -> New -> Platform Project from the menu.

- Name the project zcu106_dpu and click Next.

- Choose the Create a new platform from hardware (XSA) tab, and then click Browse to select the XSA file.

- Select the XSA file we just built at $TRD_HOME/pl/build/zcu106_ROI_HDMI/zcu106_ROI_HDMI.xsa/.

- Select linux as the operating system.

- Select psu_cortexa53 as the processor.

- Select 64-bit as the architecture.

- Uncheck Generate boot components.

- Click Finish.

- Select zcu106_dpu -> psu_cortexa53 -> linux on psu_cortexa53 and add the required file paths:

  - Bif File: $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/boot/linux.bif

  - Boot Components Directory: $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/boot/

  - Linux Image Directory: $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/image/

- Right click on the zcu106_dpu project and select Build project.

DPU build

- Clone the Vitis-AI repo to the working directory and apply a patch to add support for the ZCU106 board in the Vitis DPU TRD. Note that we’re going to clone it to a directory called Vitis-AI-ZCU106 in case you will be building the hardware platform for more than one target.

cd $WORK

git clone https://github.com/Xilinx/Vitis-AI.git Vitis-AI-ZCU106

cd Vitis-AI-ZCU106

git checkout tags/v1.3 -b v1.3

git am $TRD_HOME/dpu/0001-Added-ZCU106-configuration-to-support-DPU-in-ZCU106.patch

- Create an environment variable to store the path of the Vitis-AI DPU TRD:

export DPU_TRD_HOME=$WORK/Vitis-AI-ZCU106/dsa/DPU-TRD

- Source the setup script for XRT. Earlier we sourced the setup script for Vitis, but if you have not done this, you should do so now.

source /opt/xilinx/xrt/setup.sh

- In this step we’re going to replace the dpu_conf.vh file of the Vitis-AI DPU TRD with the one for the ZCU106 VCU HDMI ROI TRD:

cp $TRD_HOME/dpu/dpu_conf.vh $DPU_TRD_HOME/prj/Vitis/dpu_conf.vh

- Now we build the hardware design. This step takes a long time.

cd $DPU_TRD_HOME/prj/Vitis

export EDGE_COMMON_SW=$TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/

export SDX_PLATFORM=$TRD_HOME/vitis_ws/zcu106_dpu/export/zcu106_dpu/zcu106_dpu.xpfm

make KERNEL=DPU DEVICE=zcu106_dpu
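Because the make step takes so long, a quick pre-flight check just before launching it may save a wasted run. This is a sketch of my own rather than part of the DPU TRD: check_dpu_inputs is a hypothetical name, and it only confirms that the two exported paths exist and that XILINX_XRT is set (which sourcing the XRT setup.sh script does).

```shell
#!/bin/sh
# Hypothetical pre-flight check for the DPU build: run it after the two
# export commands and before make.
check_dpu_inputs() {
    ok=0
    [ -d "$EDGE_COMMON_SW" ] || { echo "EDGE_COMMON_SW not found: $EDGE_COMMON_SW" >&2; ok=1; }
    [ -f "$SDX_PLATFORM" ] || { echo "SDX_PLATFORM not found: $SDX_PLATFORM" >&2; ok=1; }
    [ -n "$XILINX_XRT" ] || { echo "XILINX_XRT is not set; source /opt/xilinx/xrt/setup.sh" >&2; ok=1; }
    return "$ok"
}
```

Usage: check_dpu_inputs && make KERNEL=DPU DEVICE=zcu106_dpu. A missing .xpfm usually means the Vitis platform project was not built yet.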

Put together the PAC

The Platform Assets Container (PAC) is a collection of boot assets that is required by the xlnx-config snap to load a custom hardware platform in Ubuntu for ZCU10x. The PAC has a defined directory structure which must be respected. The PAC structure for this example is shown below, with added support for the ZCU102 and ZCU104 boards just to illustrate how it would normally be organized:

custom_pac
|
└-- hwconfig
    |-- custom_cfg
    |   |-- manifest.yaml
    |   |-- zcu102
    |   |   |-- bl31.elf
    |   |   |-- bootgen.bif
    |   |   |-- dpu.xclbin
    |   |   |-- zynqmp_fsbl.elf
    |   |   |-- pmufw.elf
    |   |   |-- system.bit
    |   |   └-- system.dtb
    |   |-- zcu104
    |   |   |-- bl31.elf
    |   |   |-- bootgen.bif
    |   |   |-- dpu.xclbin
    |   |   |-- zynqmp_fsbl.elf
    |   |   |-- pmufw.elf
    |   |   |-- system.bit
    |   |   └-- system.dtb
    |   └-- zcu106
    |       |-- bl31.elf
    |       |-- bootgen.bif
    |       |-- dpu.xclbin
    |       |-- zynqmp_fsbl.elf
    |       |-- pmufw.elf
    |       |-- system.bit
    |       └-- system.dtb
    |
    |-- <config_2>
    .
    .
    └-- <config_N>

Note that our actual PAC will have support for the ZCU106 only, so the zcu102 and zcu104 directories will not be there. Also, folders config_2 and config_N are only there to demonstrate that we can also have multiple “configurations” in a single PAC, although ours will only have one configuration called “custom_cfg”.

To put the PAC together, follow these instructions:

- Use the following commands to create the PAC directory structure and copy over the required ZCU106 build products:

mkdir -p $WORK/custom_pac/hwconfig/custom_cfg/zcu106

cp $DPU_TRD_HOME/prj/Vitis/binary_container_1/sd_card/dpu.xclbin $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

cp $DPU_TRD_HOME/prj/Vitis/binary_container_1/sd_card/system.dtb $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

cp $DPU_TRD_HOME/prj/Vitis/binary_container_1/link/int/system.bit $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

cp $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/bl31.elf $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

cp $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/pmufw.elf $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

cp $TRD_HOME/apu/vcu_petalinux_bsp/xilinx-vcu-roi-zcu106-v2020.2-final/images/linux/zynqmp_fsbl.elf $WORK/custom_pac/hwconfig/custom_cfg/zcu106/.

What about U-Boot? You may have noticed that we haven’t put a u-boot.elf file in the PAC. This is because we need to use the one that is built into the Ubuntu image (the “golden” copy), located at /usr/lib/u-boot/xilinx_zynqmp_virt/u-boot.elf. We don’t need to copy it into our PAC either; we just need to link to it from the bootgen.bif file, which we do next.

- Now create the bootgen.bif file with this command:

gedit $WORK/custom_pac/hwconfig/custom_cfg/zcu106/bootgen.bif

- Copy and paste the following text into the bootgen.bif file and then save the file:

the_ROM_image:
{
[bootloader, destination_cpu=a53-0] /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106/zynqmp_fsbl.elf
[pmufw_image] /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106/pmufw.elf
[destination_device=pl] /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106/system.bit
[destination_cpu=a53-0, exception_level=el-3, trustzone] /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106/bl31.elf
[destination_cpu=a53-0, load=0x00100000] /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106/system.dtb
[destination_cpu=a53-0, exception_level=el-2] /usr/lib/u-boot/xilinx_zynqmp_virt/u-boot.elf
}

- Now create the manifest.yaml file with this command:

gedit $WORK/custom_pac/hwconfig/custom_cfg/manifest.yaml

- Copy and paste the following text into the manifest.yaml file and then save the file:

name: custom_platform
description: Boot assets for custom platform
revision: 1
assets:
  zcu106: zcu106

- Now we want to compress the PAC so that it is easily transferred over to the ZCU106:

cd $WORK

zip -r custom_pac.zip custom_pac
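If you prefer not to copy and paste through gedit, the two text files can also be written from the shell, and the finished PAC can be checked before zipping. This is a sketch of my own, not part of the TRD or of xlnx-config: write_pac_files and check_pac are hypothetical names, and the file contents are exactly the bootgen.bif and manifest.yaml shown above. Note that the paths inside bootgen.bif are where the PAC will live on the board, not on the PC, so if you install the PAC under /boot/firmware/xlnx-config/ instead, PAC_DIR would presumably need to change to match.

```shell
#!/bin/sh
# Install location of the PAC on the ZCU106 (not on the desktop PC).
PAC_DIR=/usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106

# write_pac_files: write bootgen.bif and manifest.yaml into a PAC tree,
# overwriting any existing copies.
# $1: the custom_pac directory on the desktop PC (e.g. $WORK/custom_pac)
write_pac_files() {
    cfg="$1/hwconfig/custom_cfg"
    mkdir -p "$cfg/zcu106" || return 1
    # Unquoted EOF, so $PAC_DIR is expanded into the BIF.
    cat > "$cfg/zcu106/bootgen.bif" <<EOF
the_ROM_image:
{
    [bootloader, destination_cpu=a53-0] $PAC_DIR/zynqmp_fsbl.elf
    [pmufw_image] $PAC_DIR/pmufw.elf
    [destination_device=pl] $PAC_DIR/system.bit
    [destination_cpu=a53-0, exception_level=el-3, trustzone] $PAC_DIR/bl31.elf
    [destination_cpu=a53-0, load=0x00100000] $PAC_DIR/system.dtb
    [destination_cpu=a53-0, exception_level=el-2] /usr/lib/u-boot/xilinx_zynqmp_virt/u-boot.elf
}
EOF
    # Quoted EOF, written verbatim: two-space YAML indentation, no tabs.
    cat > "$cfg/manifest.yaml" <<'EOF'
name: custom_platform
description: Boot assets for custom platform
revision: 1
assets:
  zcu106: zcu106
EOF
}

# check_pac: report any file from this post's PAC layout that is missing.
check_pac() {
    cfg="$1/hwconfig/custom_cfg"
    status=0
    for f in manifest.yaml zcu106/bootgen.bif zcu106/dpu.xclbin \
             zcu106/system.dtb zcu106/system.bit zcu106/bl31.elf \
             zcu106/pmufw.elf zcu106/zynqmp_fsbl.elf; do
        [ -f "$cfg/$f" ] || { echo "Missing from PAC: $f" >&2; status=1; }
    done
    return "$status"
}
```

Usage, after copying the build products: write_pac_files "$WORK/custom_pac" && check_pac "$WORK/custom_pac" && (cd "$WORK" && zip -r custom_pac.zip custom_pac).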

Install the PAC on the ZCU106

To go through these steps you will need to have completed the previous post. Once you have your ZCU106 set up and running Ubuntu 20.04 LTS, we can install the PAC onto it.

- Power up the ZCU106 and login to Ubuntu 20.04 LTS.

-

Open a terminal window

Ctrl-Alt-T. -

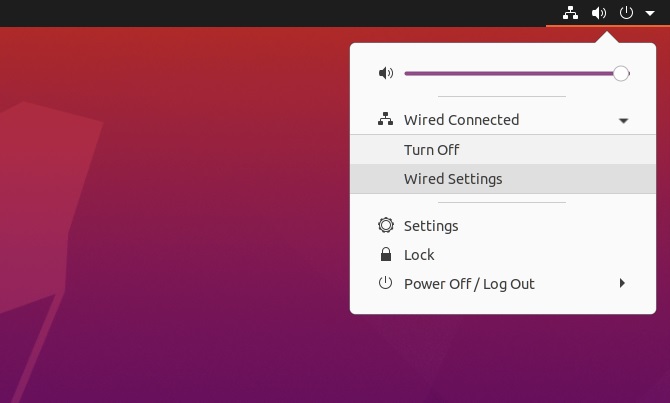

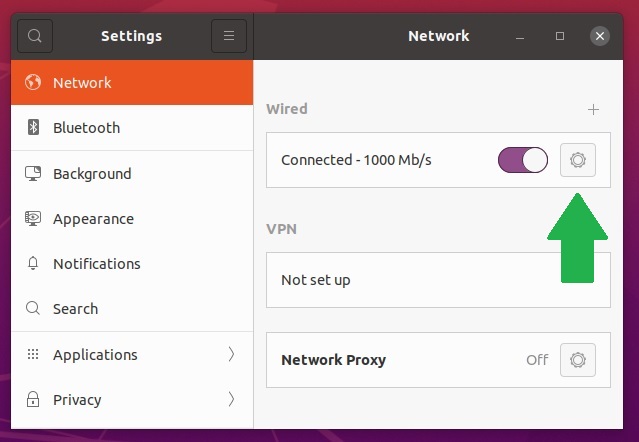

We will need to get the IP address of the ZCU106. This can be done from a terminal window by running the command:

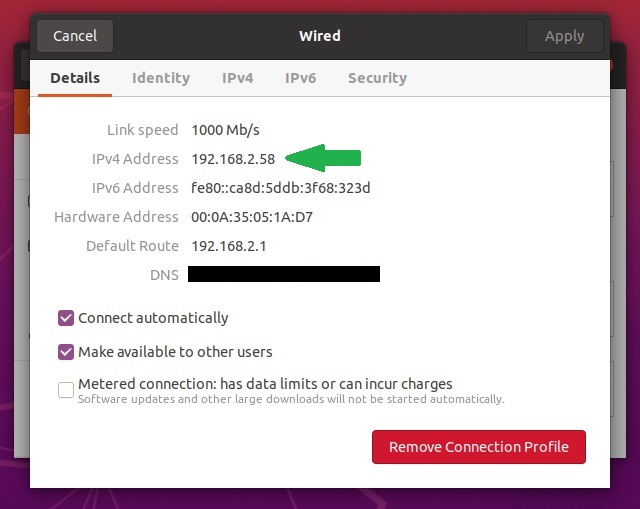

hostname -I. Alternatively it can be done in the GUI, by following these instructions. Click on the network icon in the top right corner of the screen.

-

Click on the gear icon for the wired network.

-

The IP address of your board will be shown under “IPv4 Address”.

- Now that we have the IP address of the ZCU106, we can transfer the compressed file containing our PAC from our desktop PC to the ZCU106. At this point you should switch over to your desktop PC and run these commands FROM THE DESKTOP PC (change the IP address to the one you found in the previous step):

cd $WORK

scp custom_pac.zip ubuntu@192.168.2.58:~/.

You will be asked if you trust the connection (answer YES) and then for the password of the ubuntu user. The file will then be transferred over to the ZCU106 and into the home directory.

- Now switch back over to the ZCU106 Ubuntu 20.04 LTS, and run these commands to extract the PAC into the /usr/local/share/xlnx-config directory:

cd ~

sudo mkdir -p /usr/local/share/xlnx-config

sudo unzip custom_pac.zip -d /usr/local/share/xlnx-config

The /usr/local/share/xlnx-config directory does not exist when you first boot Ubuntu, so we need to create it before being able to copy PACs into it. PACs can go into that directory, or alternatively they can go into /boot/firmware/xlnx-config/.

- We have now manually installed the PAC on the ZCU106, and we should check that it was done correctly by running xlnx-config -q. The results should be the following:

ubuntu@zynqmp:~$ xlnx-config -q
Hardware Platforms Present in the System:
| PAC Cfg |Act| zcu106 Assets Directory
---------------------------------------------------------------------------------------------------------------
| custom_platform | | /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106
---------------------------------------------------------------------------------------------------------------
* No configuration is currently activated *

If you get error messages at this point, one thing to check is that the manifest.yaml file is correctly formatted. Remember that indentation is meaningful in YAML files: you should use spaces rather than tabs, with 2 spaces per indentation level. You also need to make sure that the property names are spelt correctly. If you copy and paste my example above, you should not have any issues.

- If the PAC is correctly installed and you get the output shown above, then we are ready to activate the PAC. Run the command sudo xlnx-config -a custom_platform and you should see the following result:

ubuntu@zynqmp:~$ sudo xlnx-config -a custom_platform
Activating assets for custom_platform on the zcu106
* Generating boot binary...
* Updating Multi-boot register
* Updating /var/lib/xlnx-config/active_board with zcu106
IMPORTANT: Please reboot the system for the changes to take effect.

- As the previous message indicated, we need to reboot the ZCU106 for the new platform to be loaded. Note that this is not a dynamic load like the one that is possible with the Kria; we actually need to reboot for the new bitstream and hardware platform to be loaded. To reboot, run sudo reboot now.

- When Ubuntu is back up, open a terminal window (Ctrl-Alt-T) and run xlnx-config -q again to make sure that the platform we built is actually loaded and active. You should get this output, showing that the platform we installed is active:

ubuntu@zynqmp:~$ xlnx-config -q
Hardware Platforms Present in the System:
| PAC Cfg |Act| zcu106 Assets Directory
---------------------------------------------------------------------------------------------------------------
| custom_platform | * | /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/zcu106
---------------------------------------------------------------------------------------------------------------
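If xlnx-config -q reported errors instead of the tables above, the manifest.yaml advice can be checked mechanically on the board. This is a rough sketch of my own, not a YAML validator: lint_manifest is a hypothetical name, and it only flags tab characters and lines whose leading spaces are not a multiple of two, following the two-spaces-per-level convention used in this post.

```shell
#!/bin/sh
# Hypothetical lint for manifest.yaml: flag tabs and odd-width indentation.
# Matching lines are printed (with line numbers) to stderr.
lint_manifest() {
    file="$1"
    tab=$(printf '\t')
    status=0
    if grep -n "$tab" "$file" >&2; then
        echo "Tabs found in $file (use spaces)" >&2
        status=1
    fi
    # Leading run of spaces with odd length: pairs of spaces, one extra space,
    # then a non-space character.
    if grep -nE '^( {2})* [^ ]' "$file" >&2; then
        echo "Indentation that is not a multiple of 2 spaces in $file" >&2
        status=1
    fi
    return "$status"
}
```

Usage: lint_manifest /usr/local/share/xlnx-config/custom_pac/hwconfig/custom_cfg/manifest.yaml. It won’t catch misspelt property names, so those still need checking by eye.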

To deactivate the custom platform and go back to the default boot assets, run sudo xlnx-config -d. Again, you will need to reboot for the changes to take effect.

Try it out

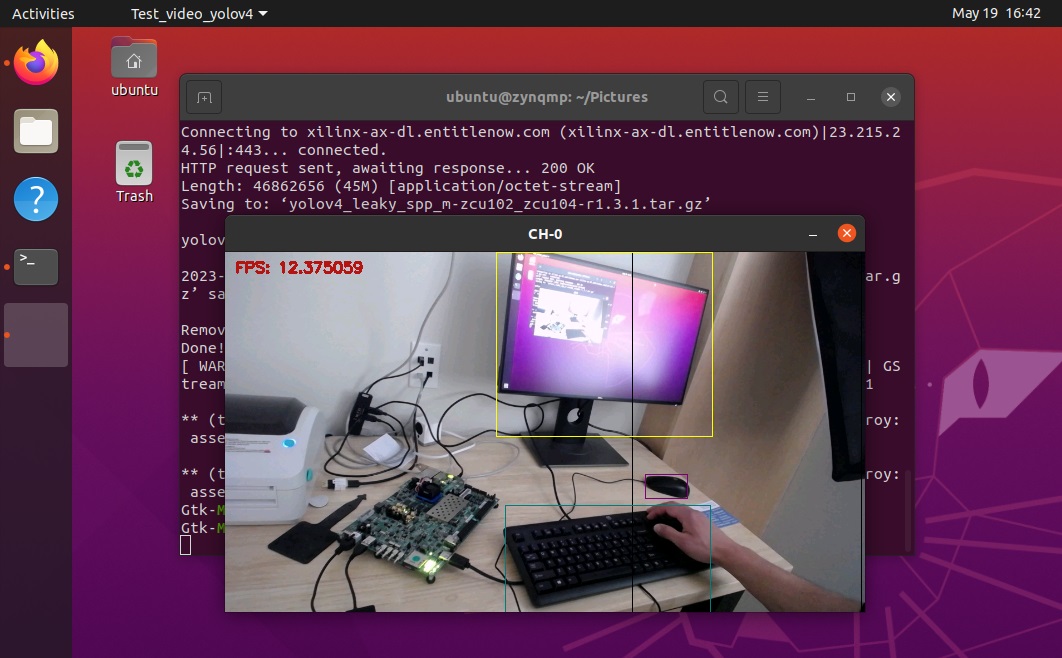

Like we did in the previous post, I encourage you to try out the Vitis AI Library Sample Applications to make sure that the newly built hardware platform is working as expected. We didn’t modify anything in the design, so it should have exactly the same functionality as the original.

Try this out:

- Plug a USB webcam into the USB port of the ZCU106.

- In Ubuntu on the ZCU106, open a terminal window by pressing Ctrl-Alt-T.

- If you haven’t already done this, install the xlnx-vai-lib-samples snap like this:

sudo snap install xlnx-vai-lib-samples

- Run the YOLOv4 application and see what it can recognize:

xlnx-vai-lib-samples.test-video yolov4 yolov4_leaky_spp_m 2

The last argument (2) is there to select the camera device at /dev/video2, so you might need to set it to the correct one if it doesn’t work for you.
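To see which numbers are candidates for that last argument, you could list the video device nodes present on the board. A small sketch of my own: list_video_devices is a hypothetical name, and its optional directory argument (default /dev) exists only so that it can be tried away from the board. It simply lists the nodes; it doesn’t tell you which one is the webcam’s capture device, so you may still need to try each number in turn.

```shell
#!/bin/sh
# Hypothetical helper: print the N of every /dev/videoN node, one per line.
list_video_devices() {
    dev_dir="${1:-/dev}"
    for d in "$dev_dir"/video*; do
        # If nothing matches, the glob stays literal; skip it.
        [ -e "$d" ] || continue
        echo "${d##*/video}"
    done
}
```

Usage on the ZCU106: run list_video_devices with the webcam plugged in, then pass one of the printed numbers as the last argument to xlnx-vai-lib-samples.test-video.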

In the next post we’ll modify the hardware platform so that we can run these applications on the RPi Camera FMC! Have a great weekend!