This is the second part of a three-part tutorial series in which we will create a PCI Express Root Complex design in Vivado with the goal of connecting a PCIe NVMe solid-state drive to our FPGA.

In this second part of the tutorial series, we will build a Zynq-based design targeting the PicoZed 7Z030 and PicoZed FMC Carrier Card V2. In part 3, we will test the design on the target hardware by running a stand-alone application that validates the state of the PCIe link and enumerates the PCIe end-points. We will then run PetaLinux on the FPGA and prepare our SSD for use under the operating system.

Requirements

To complete this tutorial, you will need the following:

Note: The tutorial text and screenshots are suitable for Vivado 2015.4; however, the sources in the Git repository will be regularly updated to the latest version of Vivado.

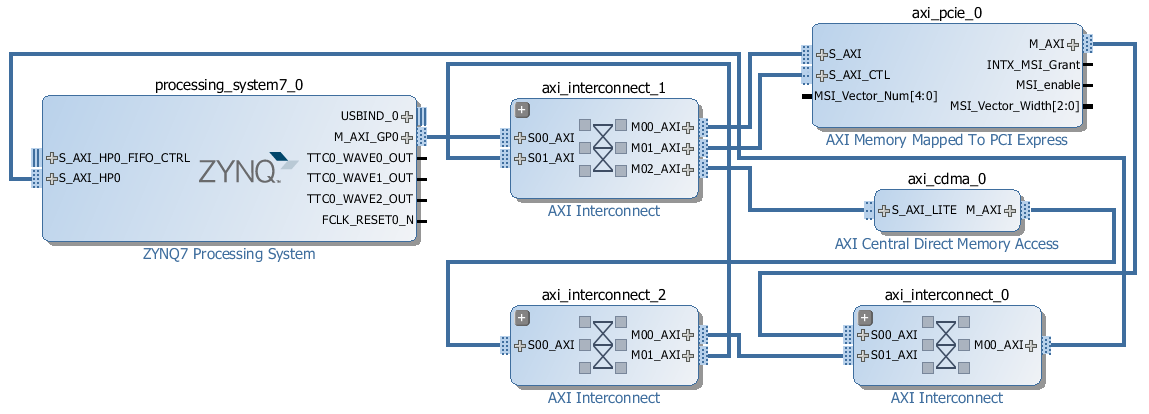

Design Overview

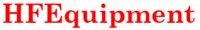

The diagram below shows the block design we are about to build, with only the AXI interfaces showing. It has three main elements: the Zynq PS, the AXI to PCIe bridge and the AXI CDMA. If you went through the previous tutorial, where we created the same design for a MicroBlaze system, you may be wondering why the Zynq design seems so much simpler. The reason is that many of the elements required in this design are hidden inside the Zynq PS block, including the DDR3 memory controller, UART, Ethernet, interrupt controller, timer and QSPI controller.

So again, let’s look at who the bus masters are and what address spaces they can access:

- the Zynq PS can access both the DDR3 memory and the PCIe address space
- the PCIe end-point with bus mastering capability can access the DDR3 memory only (via the M_AXI port of the AXI-PCIe bridge)
- the CDMA can access both the DDR3 memory and the PCIe address space

Install PicoZed board definition files

The first thing we have to do is provide the PicoZed board definition files to our Vivado installation so that the PicoZed will show up in the list of targets when we create a new project. The board definition files contain information about the hardware on the target board and also on how the Zynq PS should be configured in order to properly connect to that hardware.

- Download the PicoZed board definition files for Vivado 2015.4 from the PicoZed documentation page.
- From inside the ZIP file, copy the folder picozed_7030_fmc2 into the folder C:\Xilinx\Vivado\2015.4\data\boards\board_files of your Vivado installation.

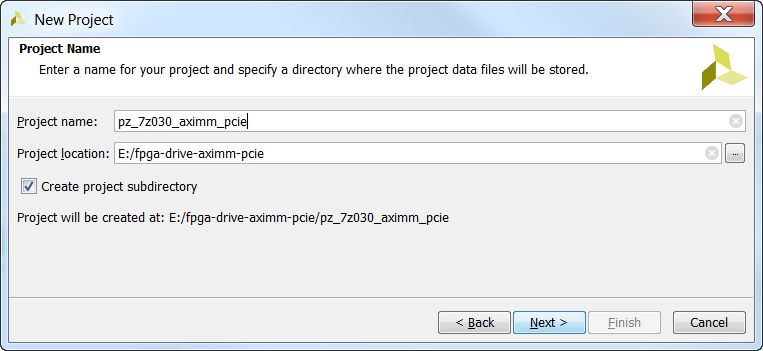

Create a new Vivado project

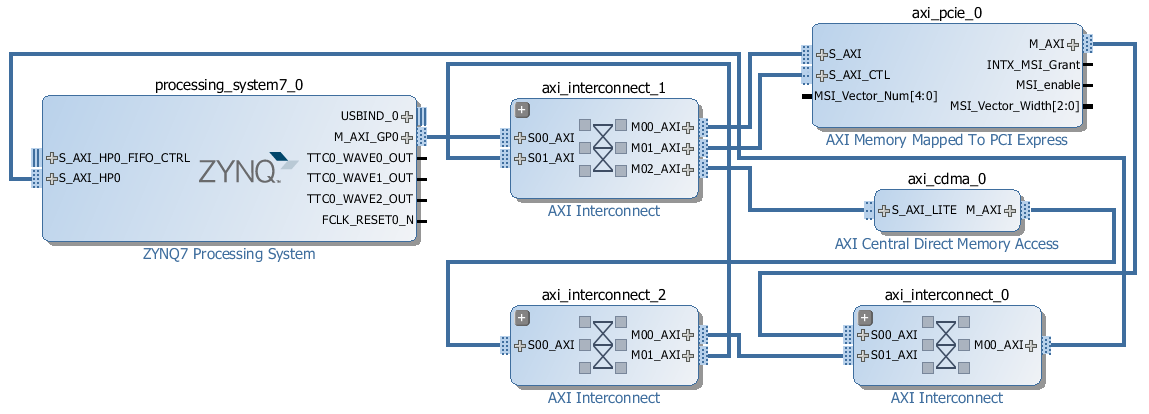

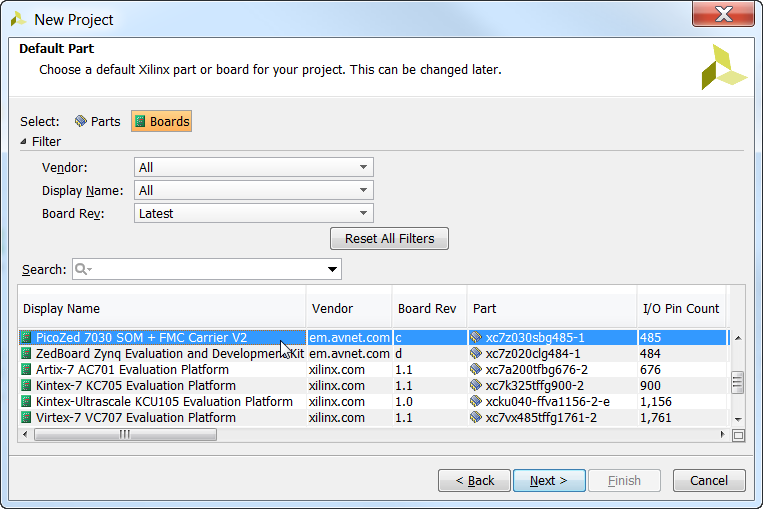

Let’s kick off the design by creating a new project in Vivado and selecting the PicoZed 7Z030 as our target.

- From the welcome screen, click “Create New Project”. Specify a folder for the project. I’ve created a folder named “kc705_aximm_pcie”. Click “Next”.

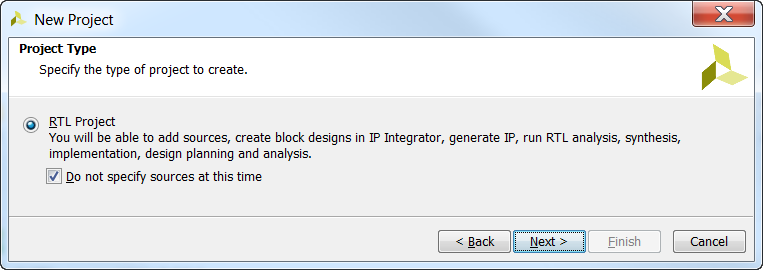

-

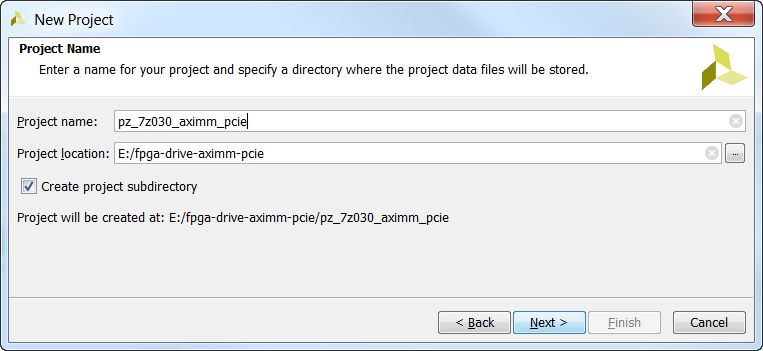

For the Project Type window, choose “RTL Project” and tick “Do not specify sources at this time”. Click “Next”.

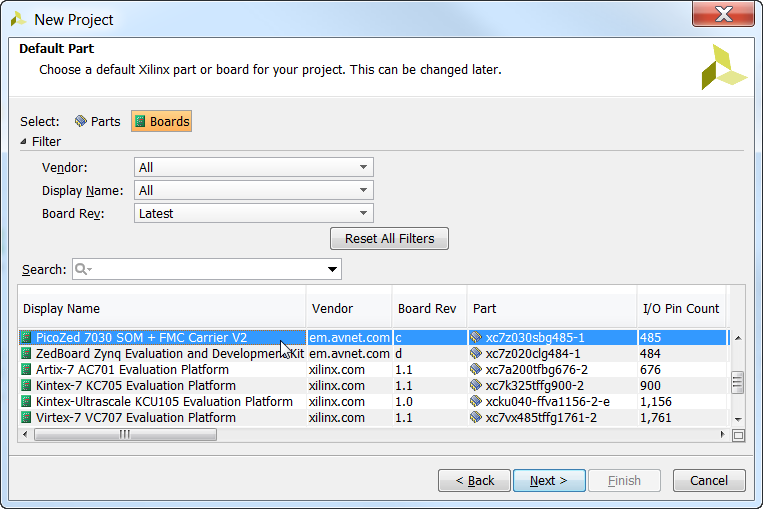

- For the Default Part window, select the “Boards” tab, then select “PicoZed 7030 SOM + FMC Carrier V2” and click “Next”.

- Click “Finish” to complete the new project wizard.

Create the block design

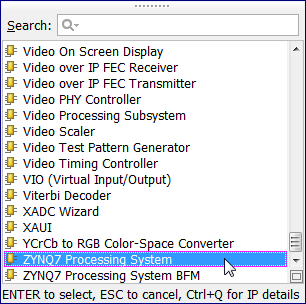

In the following steps, we’ll create the block design then add the Zynq PS and the AXI Memory Mapped PCI Express Bridge.

-

From the Vivado Flow Navigator, click “Create Block Design”.

-

Specify a name for the block design. Let’s go with the default “design_1” and leave it local to the project. Click “OK”.

-

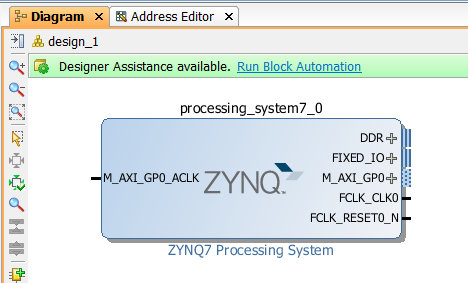

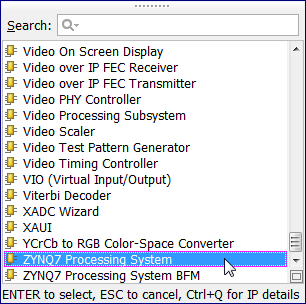

Once the empty block design opens, click on the “Add IP” icon. The IP catalog will appear. Find and double click on “ZYNQ7 Processing System”.

-

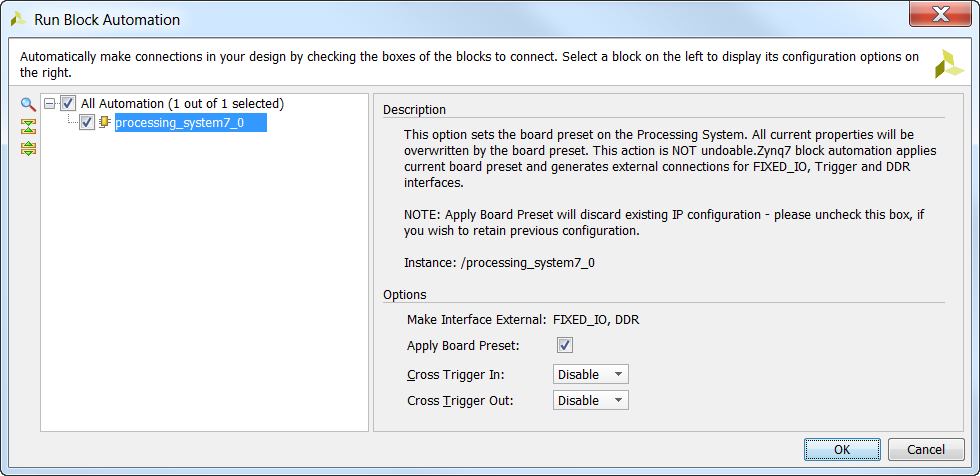

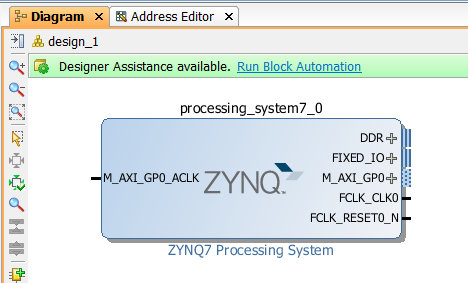

The Zynq PS block will be added to the block design. Click Run Block Automation to configure the Zynq PS for our target hardware.

-

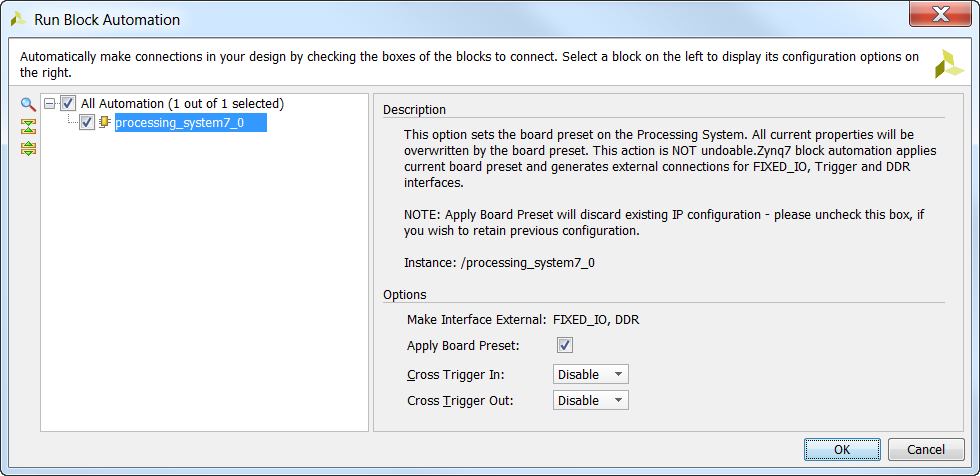

Use the default block automation settings.

-

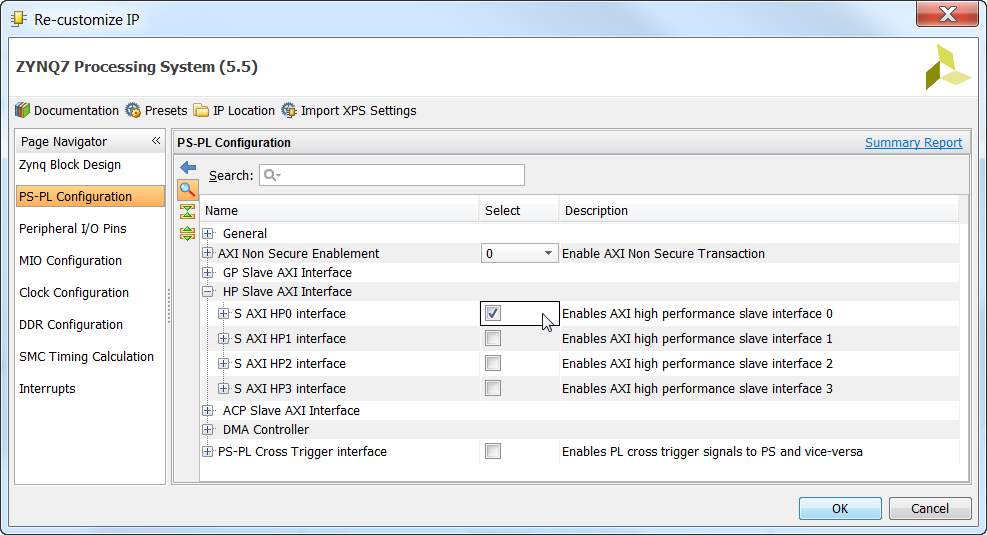

Now double click on the Zynq PS to configure it.

-

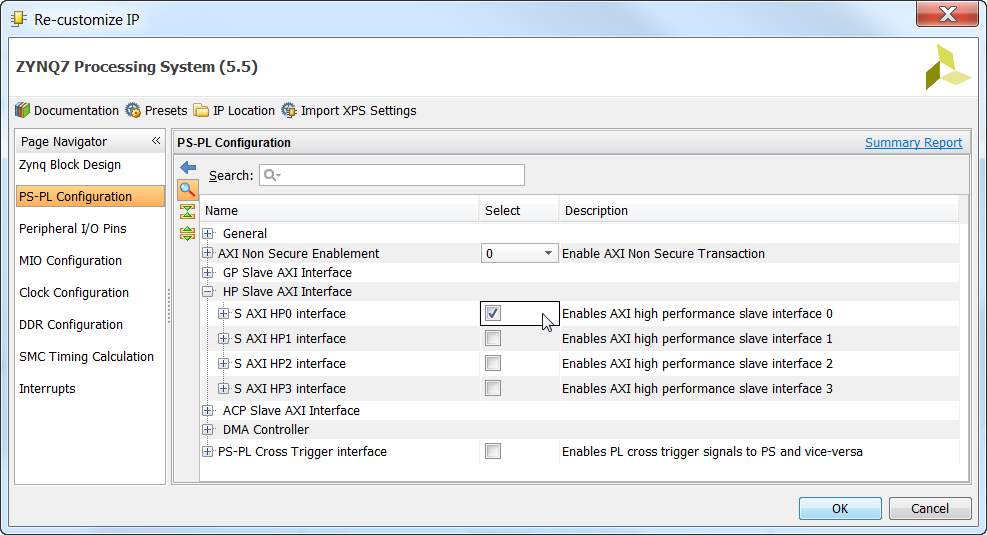

In the PS-PL Configuration tab, enable HP Slave AXI interface (S AXI HP0 interface). HP stands for high-performance and this port allows an AXI master to access the DDR3 memory.

-

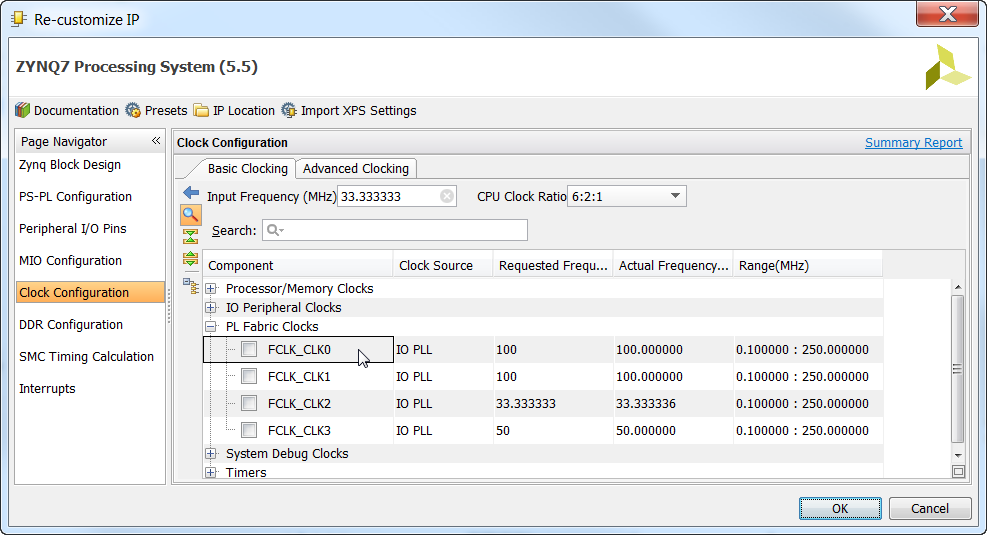

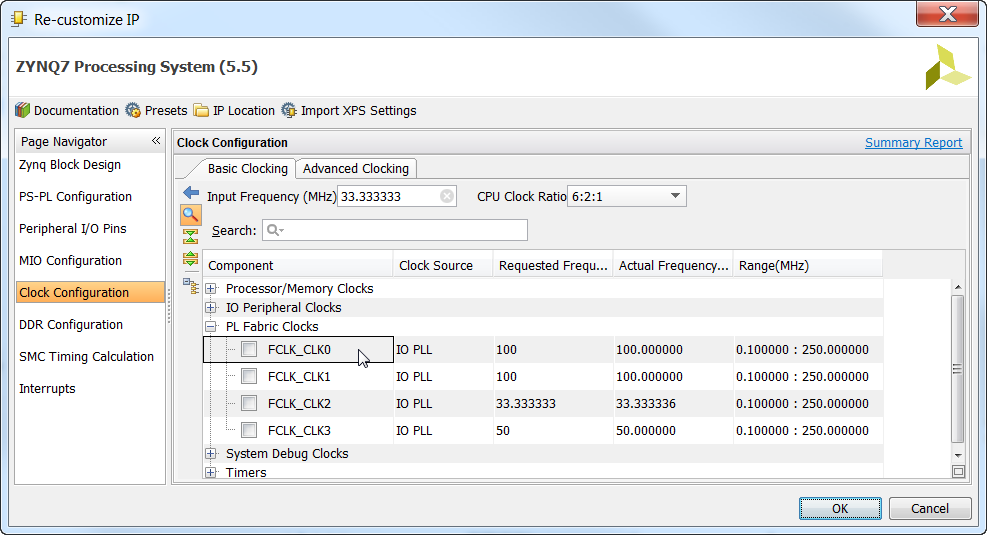

In the Clock Configuration tab, disable the PL Fabric Clock FCLK_CLK0, because we won’t be needing it. Instead, most of our design will be driven by the clock supplied by the AXI-PCIe bridge, which is derived from the 100MHz PCIe reference clock.

-

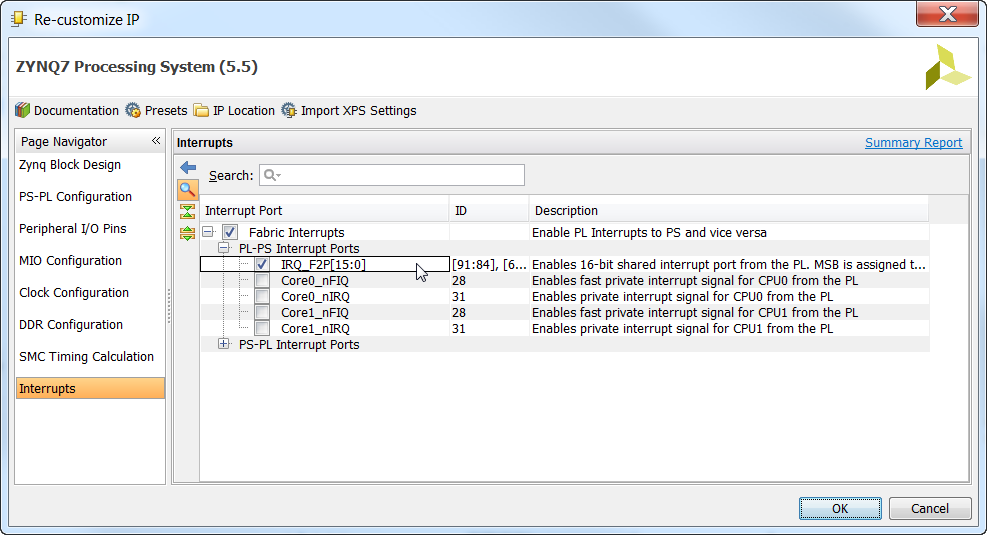

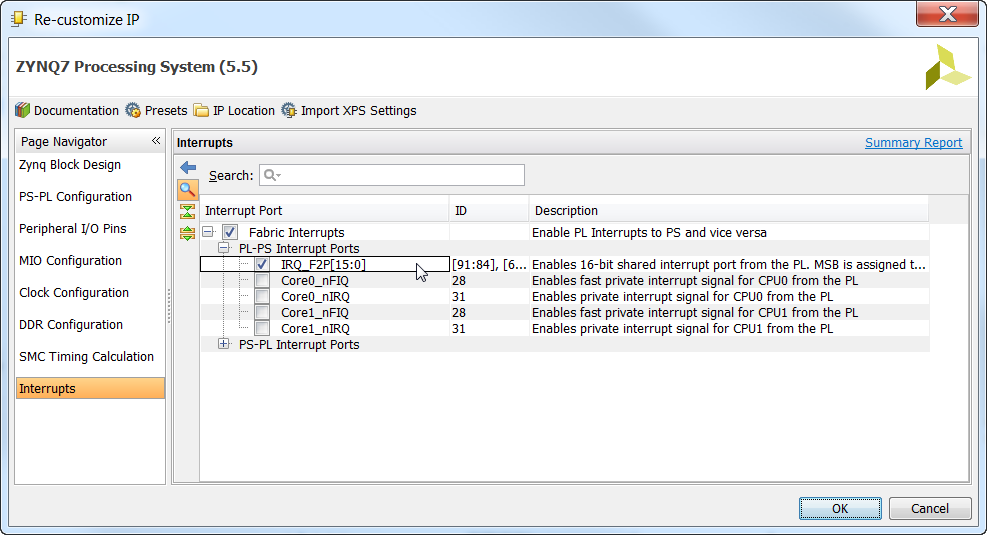

In the Interrupts tab, enable Fabric Interrupts, IRQ_F2P. This allows us to connect interrupts from our PL (programmable logic) to the Zynq PS.

-

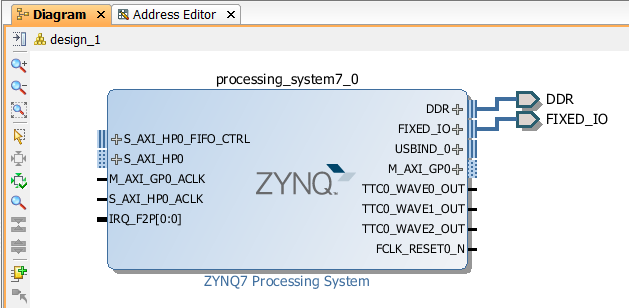

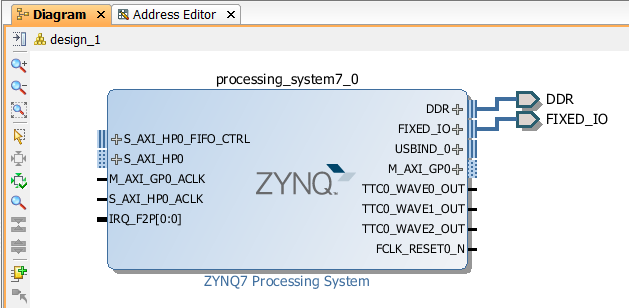

The Zynq block should look like in the image below.

Add the AXI MM to PCIe bridge

-

From the IP Catalog, add the “AXI Memory Mapped to PCI Express” block to the design.

-

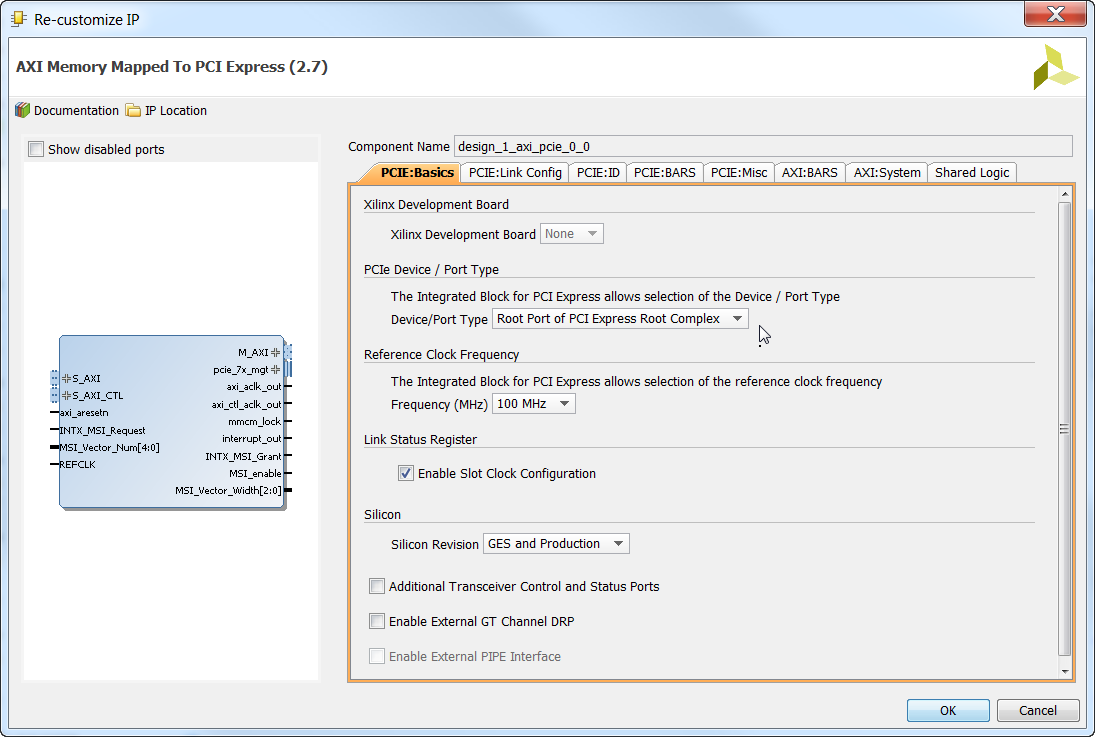

When the AXI-PCIe block is in the block design, double click on it to configure it.

-

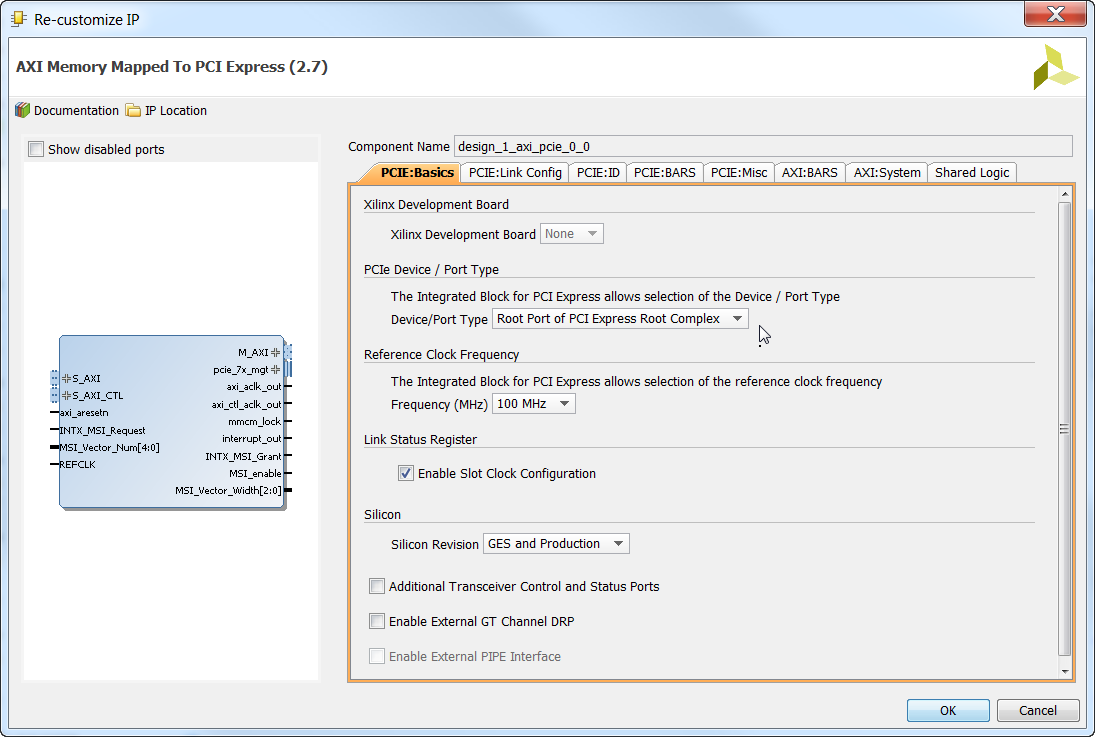

On the “PCIE:Basics” tab of the configuration, select “Root Port of PCI Express Root Complex” as the port type.

-

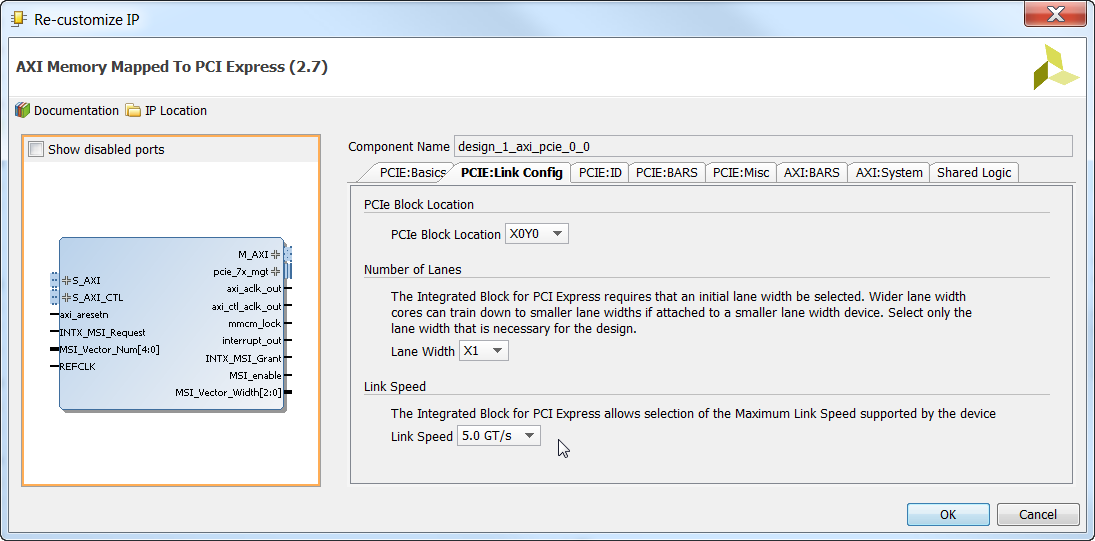

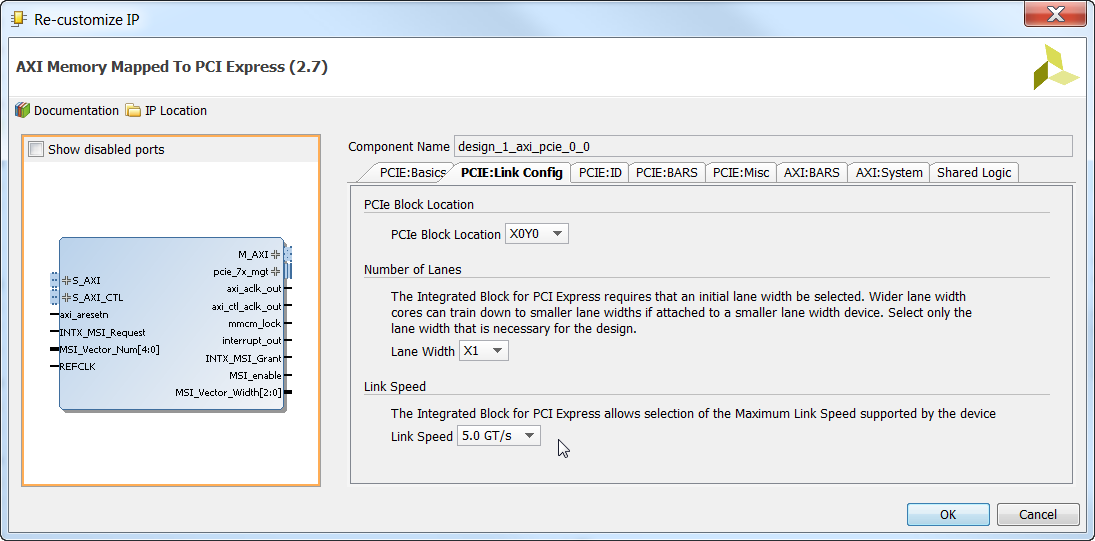

On the “PCIE:Link Config” tab, select a “Lane Width” of 1x and a “Link speed” of 5 GT/s (Gen2). We plan to connect to a 4-lane NVMe PCIe SSD in the next part of this tutorial, but the target hardware only has a single-lane PCIe edge connector.
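A quick back-of-the-envelope calculation shows what a single Gen2 lane gives us. PCIe Gen1 and Gen2 use 8b/10b line encoding, so every 10 bits on the wire carry 8 bits of data. The sketch below (the function name is ours, for illustration only) ignores packet and flow-control overhead, so treat the results as upper bounds rather than achievable throughput.

```python
# Rough per-direction PCIe bandwidth for Gen1/Gen2 links, which use 8b/10b encoding.
# Packet (TLP/DLLP) and flow-control overhead are ignored: these are upper bounds.


def raw_bandwidth_mb_per_s(gt_per_s: float, lanes: int) -> float:
    """Return raw data bandwidth in MB/s per direction for an 8b/10b-encoded link."""
    encoding_efficiency = 8 / 10          # 8 data bits per 10 line bits
    bits_per_s = gt_per_s * 1e9 * encoding_efficiency * lanes
    return bits_per_s / 8 / 1e6           # bits -> bytes -> MB


if __name__ == "__main__":
    # Our link: Gen2 (5 GT/s), 1 lane, as set in the PCIE:Link Config tab.
    print(f"Gen2 x1: {raw_bandwidth_mb_per_s(5.0, 1):.0f} MB/s")  # Gen2 x1: 500 MB/s
    # The same link width the SSD supports, for comparison.
    print(f"Gen2 x4: {raw_bandwidth_mb_per_s(5.0, 4):.0f} MB/s")  # Gen2 x4: 2000 MB/s
```

So with this hardware we can expect at most roughly 500 MB/s in each direction, a quarter of what the same SSD would see on a Gen2 x4 link.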

-

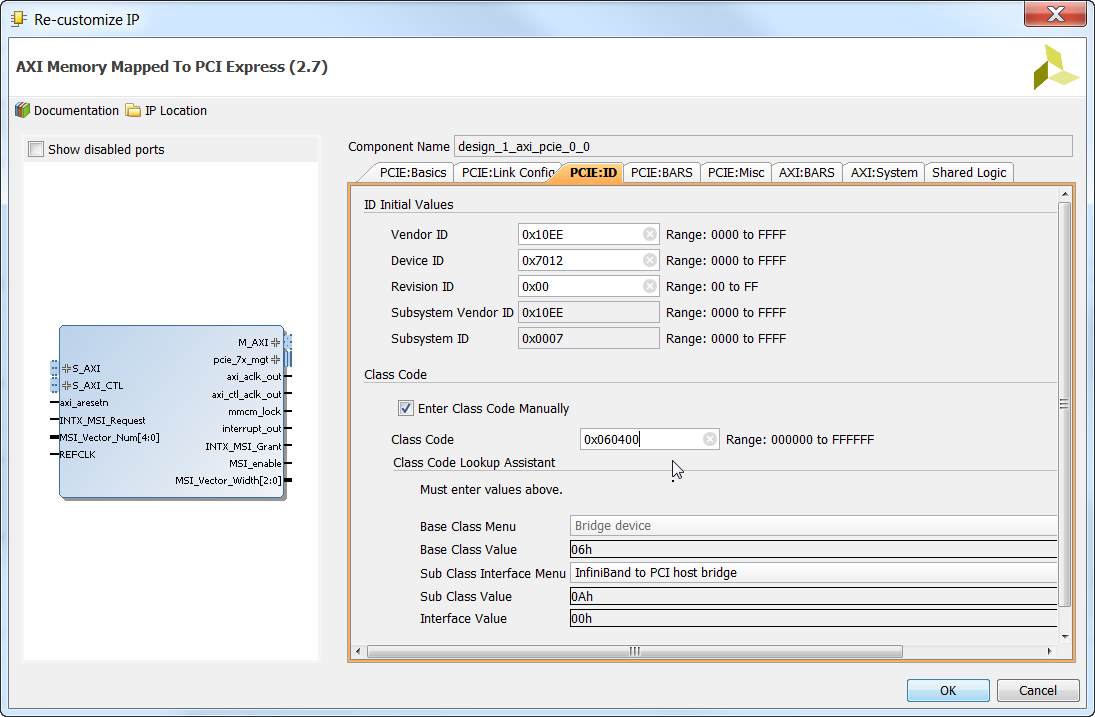

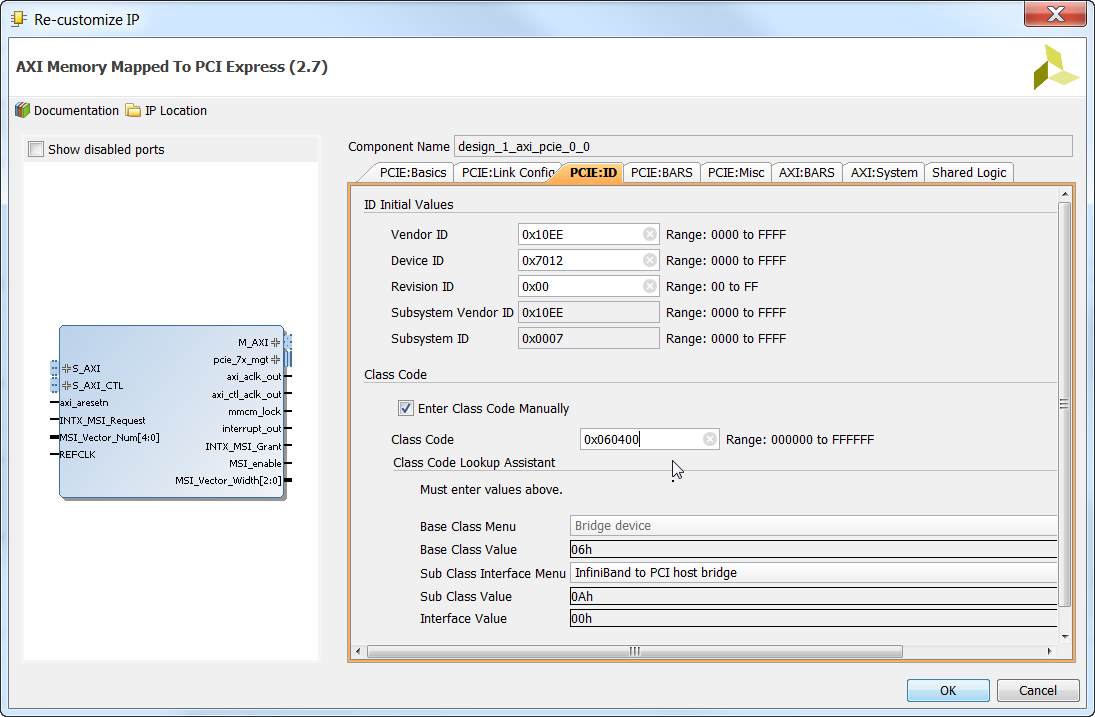

In the “PCIE:ID” tab, enter a “Class Code” of 0x060400. This is important for the next tutorial, in which we will be running PetaLinux. The class code will ensure that the correct driver is associated with the AXI to PCIe bridge IP.
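The class code is a 24-bit field made up of a base class, a sub-class and a programming-interface byte. The purely illustrative sketch below (not part of the Vivado flow) shows how 0x060400 breaks down into base class 0x06 (bridge device) and sub-class 0x04 (PCI-to-PCI bridge). The `KNOWN` lookup table is a hypothetical two-entry subset; for comparison it includes the mass-storage/non-volatile-memory class that an NVMe SSD reports.

```python
# Split a 24-bit PCI class code into its three fields.
from typing import NamedTuple


class ClassCode(NamedTuple):
    base_class: int   # bits 23:16
    sub_class: int    # bits 15:8
    prog_if: int      # bits 7:0 (programming interface)


def decode_class_code(code: int) -> ClassCode:
    """Decode a 24-bit PCI class code into (base class, sub-class, prog-if)."""
    if not 0 <= code <= 0xFFFFFF:
        raise ValueError("class code must fit in 24 bits")
    return ClassCode((code >> 16) & 0xFF, (code >> 8) & 0xFF, code & 0xFF)


# Hypothetical, deliberately tiny lookup table: (base class, sub-class) -> description.
KNOWN = {
    (0x06, 0x04): "PCI-to-PCI bridge",
    (0x01, 0x08): "Non-volatile memory controller (prog-if 0x02 = NVM Express)",
}

if __name__ == "__main__":
    for value in (0x060400, 0x010802):
        cc = decode_class_code(value)
        print(hex(value), cc, KNOWN.get((cc.base_class, cc.sub_class), "unknown"))
```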

-

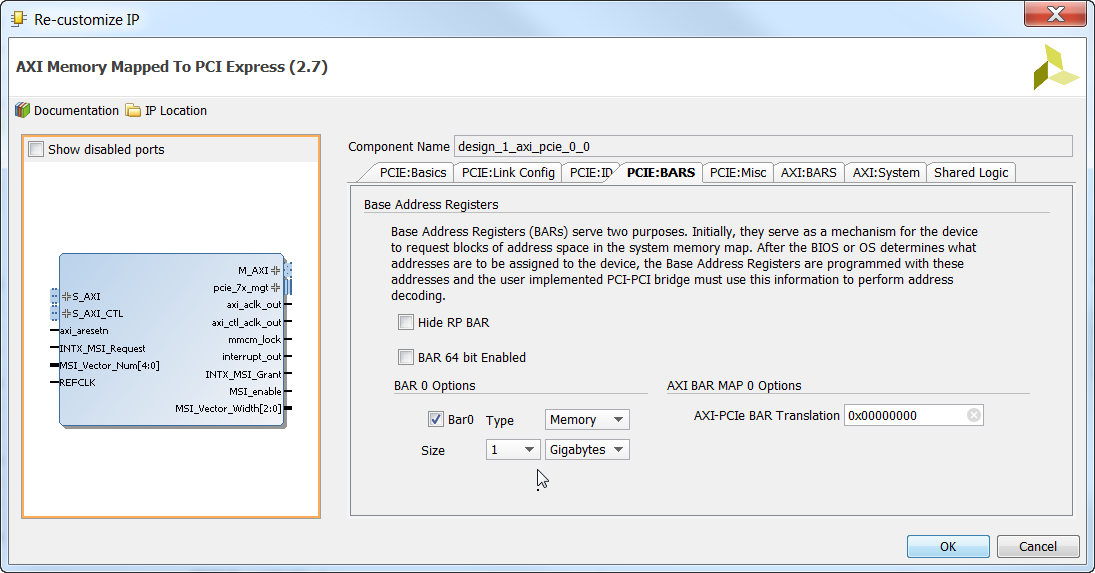

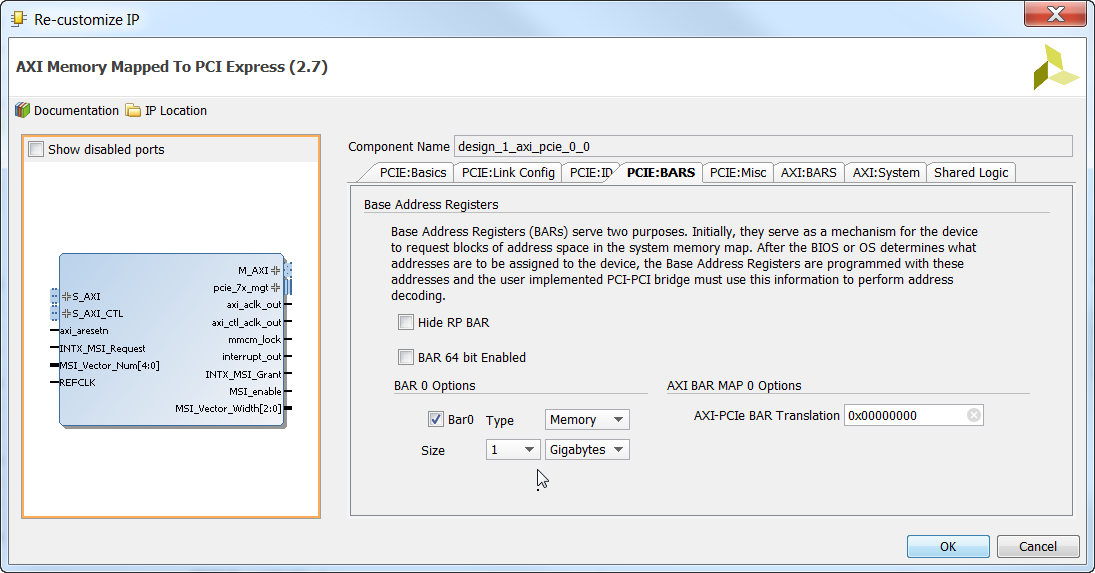

In the “PCIE:BARS” tab, set BAR 0 with type “Memory” and a size of 1 Gigabytes.
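PCI BAR sizes are always powers of two. During enumeration (which the stand-alone application in part 3 performs on the end-points), software discovers each BAR's size with a standard probe: write all ones, read the value back, clear the flag bits, invert and add one. The sketch below models that procedure for a 32-bit, non-prefetchable memory BAR. `MemoryBar32` and `probe_bar_size` are hypothetical helpers for illustration, not part of any Xilinx driver.

```python
# Simulate the standard PCI BAR sizing probe used during enumeration.


class MemoryBar32:
    """Minimal model of a 32-bit, non-prefetchable memory BAR (hypothetical helper)."""

    def __init__(self, size: int):
        if size < 16 or size & (size - 1):
            raise ValueError("BAR size must be a power of two of at least 16 bytes")
        self.size = size
        self.value = 0

    def write(self, data: int) -> None:
        # Address bits below log2(size) are hard-wired to 0, so only the upper
        # bits are writable. The flag bits 3:0 read as 0 in this model.
        self.value = data & ~(self.size - 1) & 0xFFFFFFFF

    def read(self) -> int:
        return self.value


def probe_bar_size(bar: MemoryBar32) -> int:
    original = bar.read()
    bar.write(0xFFFFFFFF)
    readback = bar.read()
    bar.write(original)                  # restore the original address
    mask = readback & 0xFFFFFFF0         # drop the memory-BAR flag bits 3:0
    return ((~mask) & 0xFFFFFFFF) + 1


if __name__ == "__main__":
    one_gig = MemoryBar32(1 << 30)
    print(f"BAR 0 size: {probe_bar_size(one_gig) >> 20} MiB")
```

For the 1 Gigabyte BAR, the all-ones write reads back as 0xC0000000, and inverting and adding one gives 0x40000000 bytes.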

-

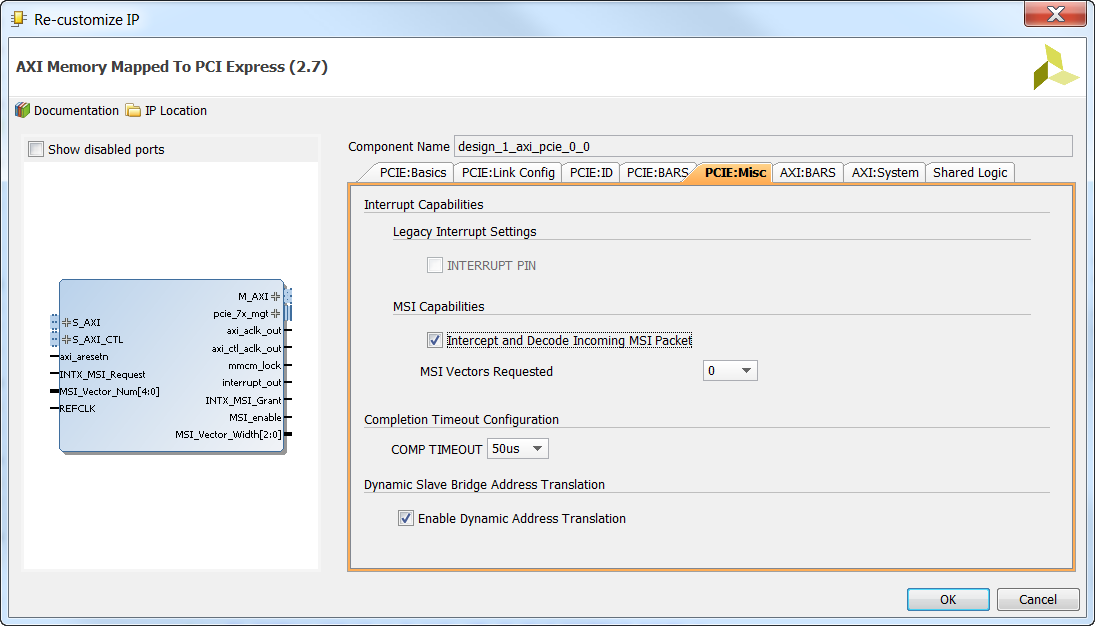

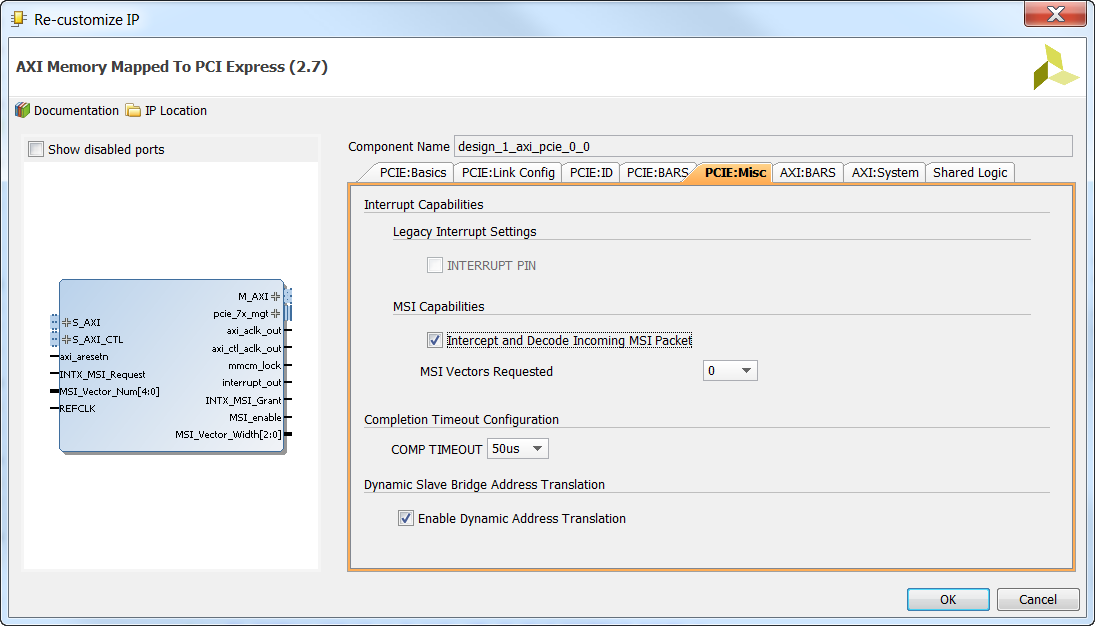

In the “PCIE:Misc” tab, use the defaults as shown in the image below.

-

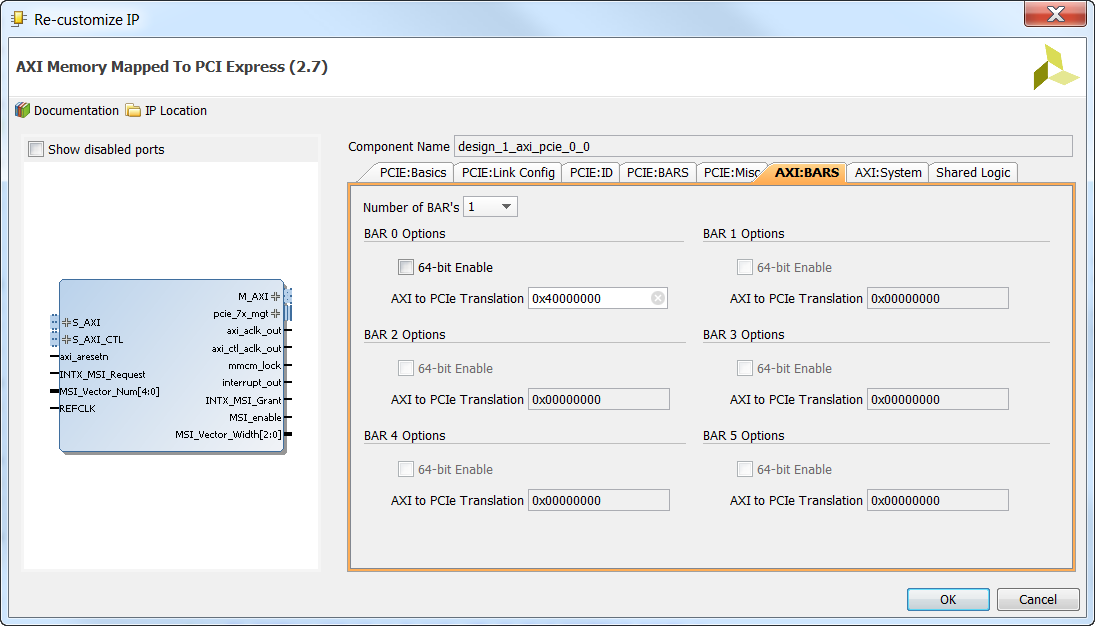

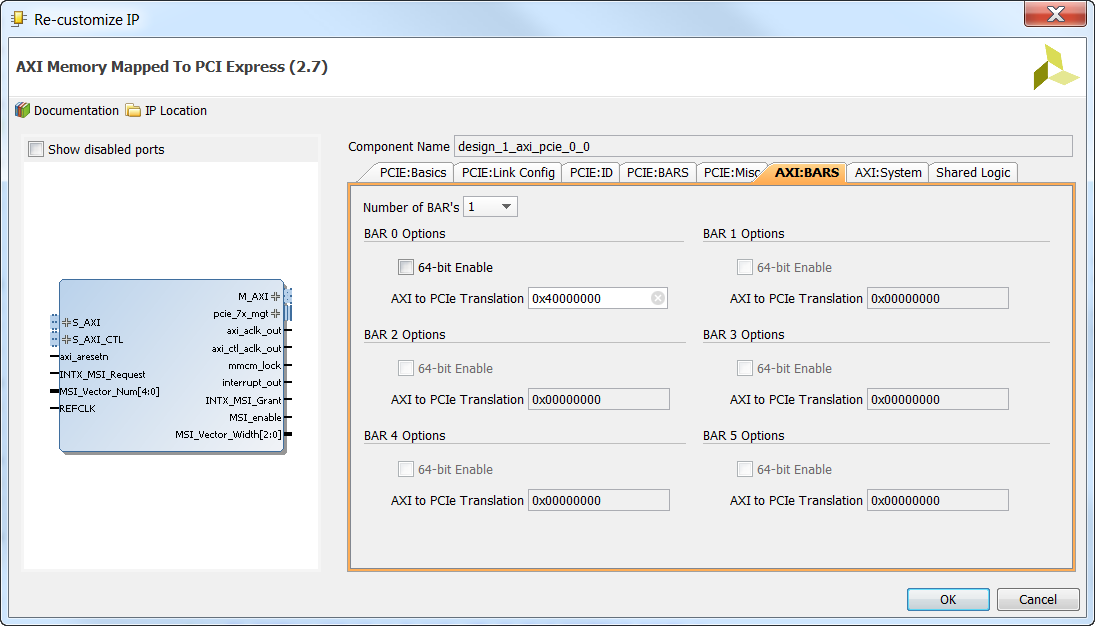

In the “AXI:BARS” tab, use the defaults as shown in the image below. We will later be able to configure the size of the AXI BAR 0 in the Address Editor.

-

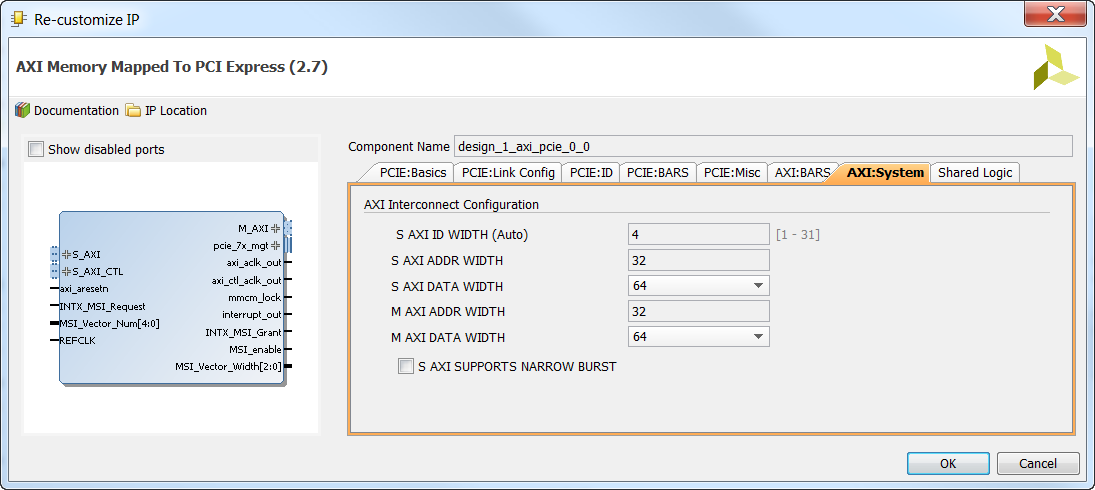

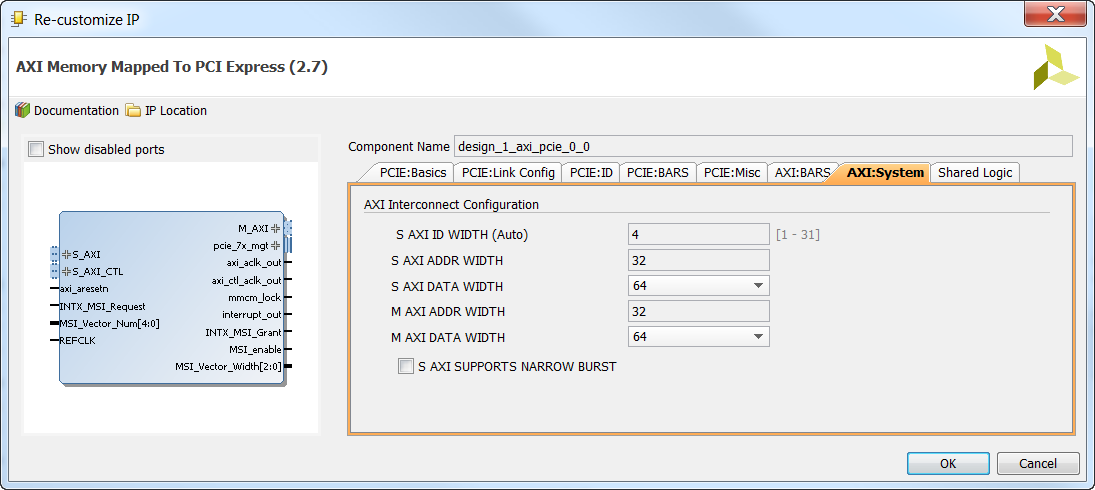

In the “AXI:System” tab, use the defaults as shown in the image below.

-

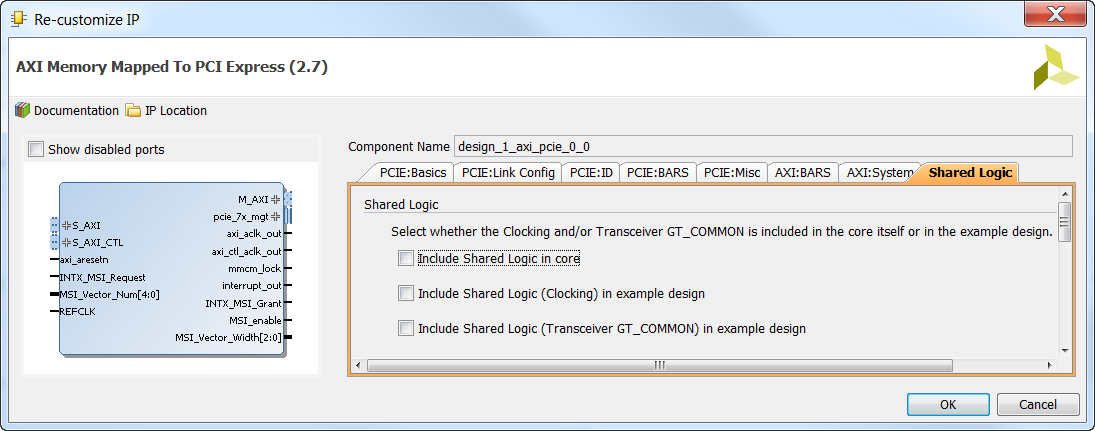

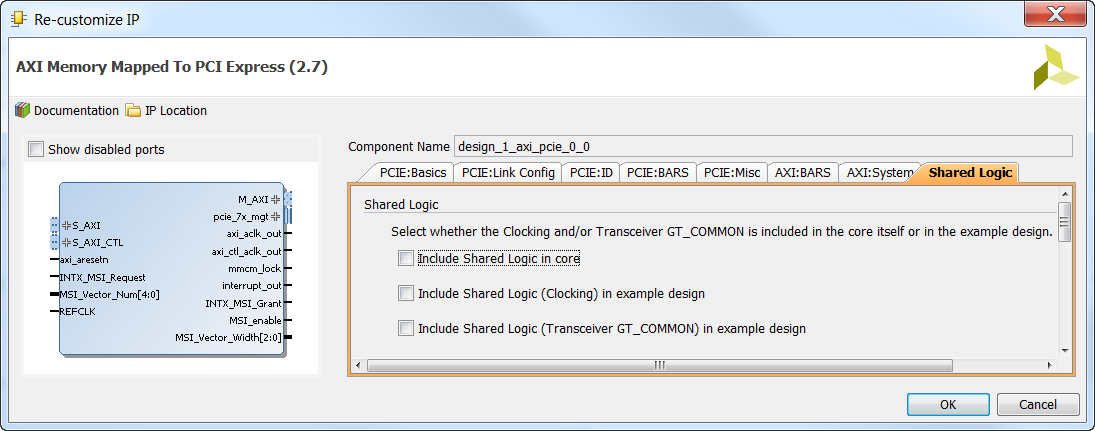

In the “Shared Logic” tab, use the defaults as shown in the image below. Click “OK”.

-

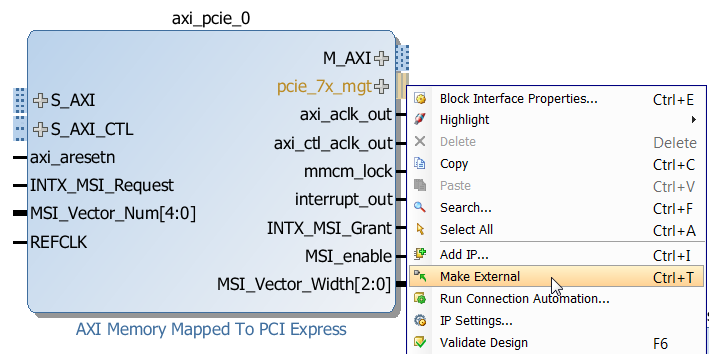

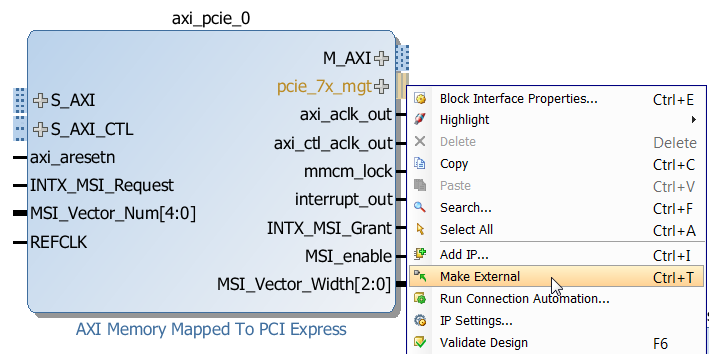

Right click on the “pcie_7x_mgt” port of the AXI-PCIe block and select “Make External”. This will connect the gigabit transceiver to the 1-lane PCIe edge-connector of the PicoZed 7030 SOM + FMC Carrier V2.

-

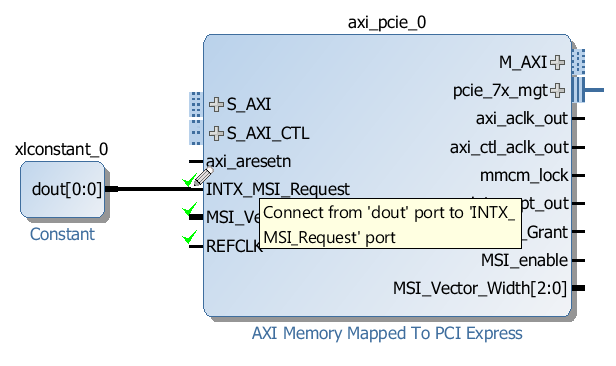

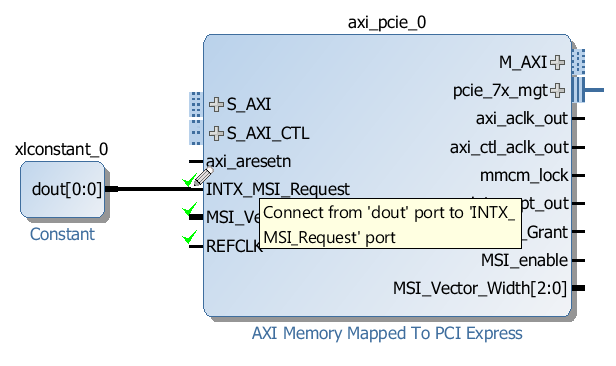

Add a “Constant” from the IP Catalog and configure it to output 0 (low). We’ll use this to tie low the “INTX_MSI_Request” input of the AXI-PCIe block. Connect the constant’s output to the “INTX_MSI_Request” input of the AXI-PCIe block.

-

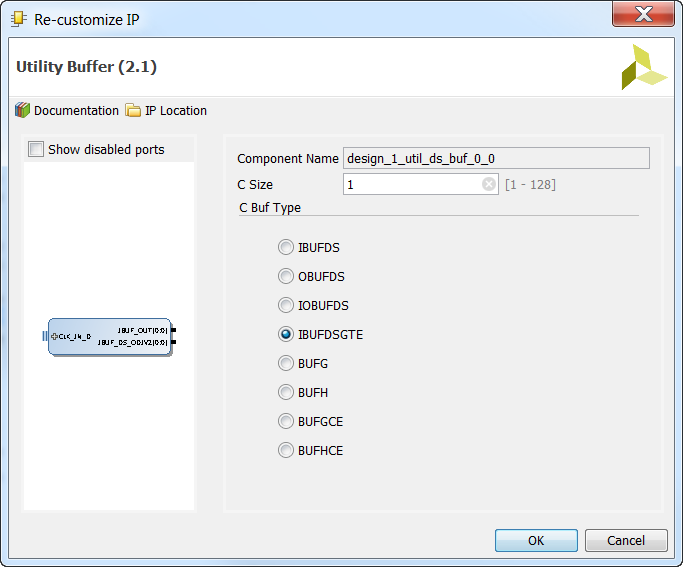

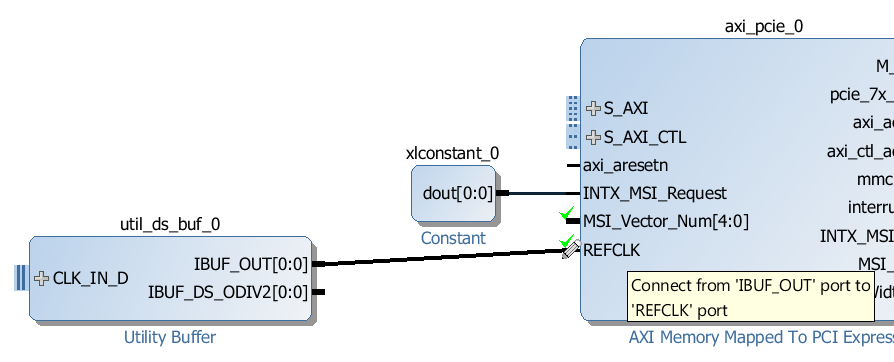

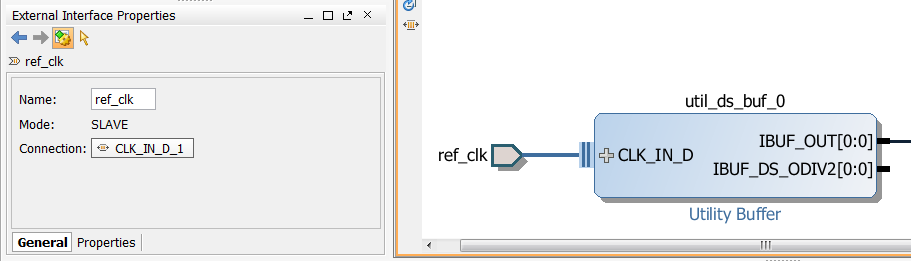

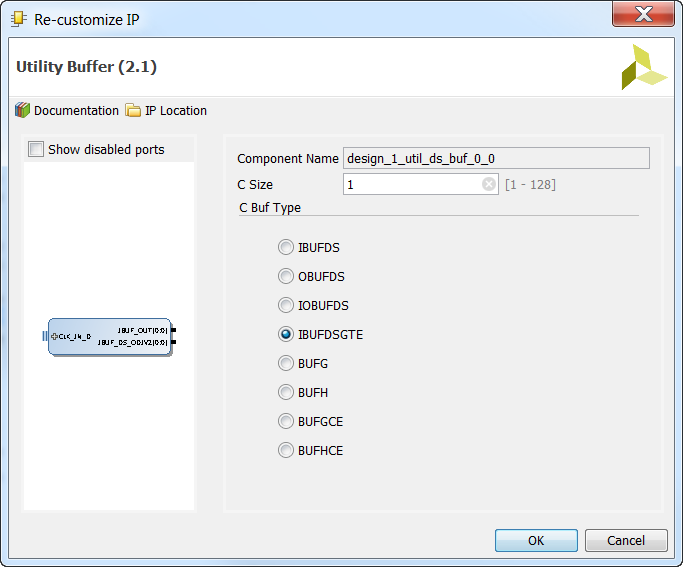

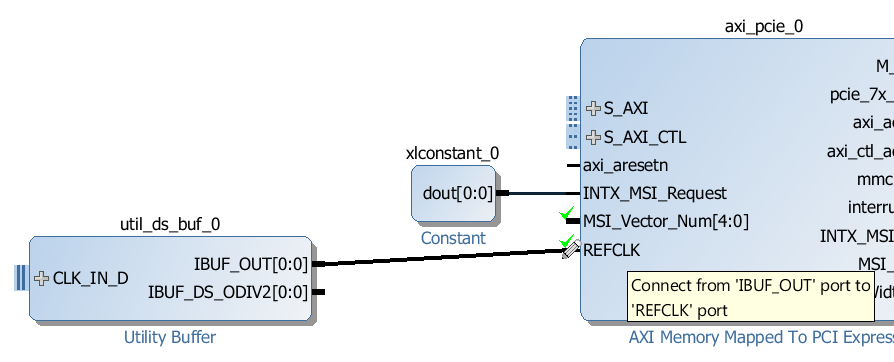

Add a “Utility Buffer” to the block design. This buffer is going to be connected to a 100MHz clock that will be provided to the PicoZed 7030 SOM + FMC Carrier V2 by the FPGA Drive adapter, via the PCIe edge-connector. A 100MHz reference clock is required by all PCIe devices. Double click on the utility buffer and on the “Page 0” tab of the configuration window, select “IBUFDSGTE” as the C Buf Type. Click “OK”.

-

Connect the “IBUF_OUT” output of the utility buffer to the “REFCLK” input of the AXI-PCIe block.

-

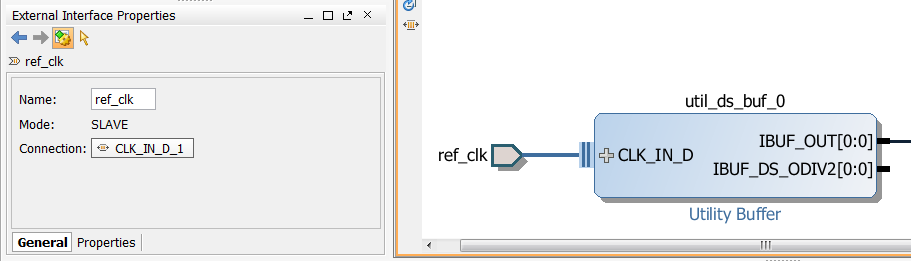

Right click on the “CLK_IN_D” input of the utility buffer and select “Make External”, then change the name of the created external port to “ref_clk” using the External Interface Properties window.

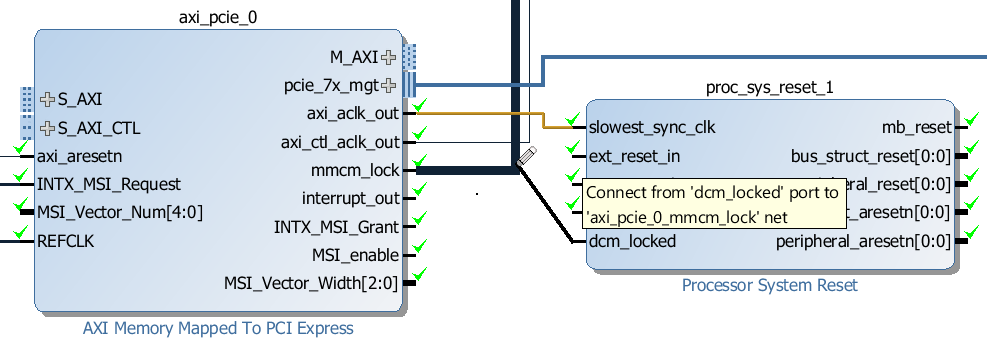

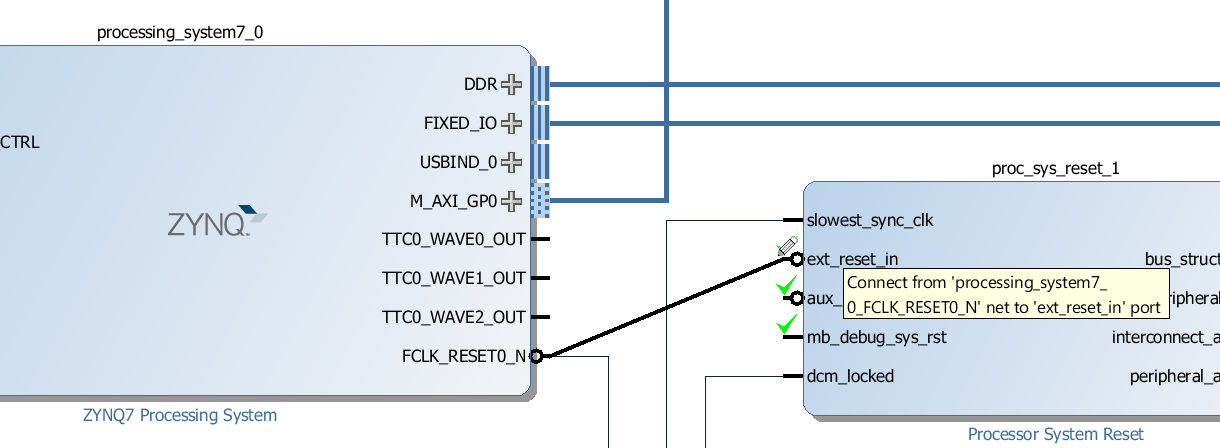

Add the Processor System Resets

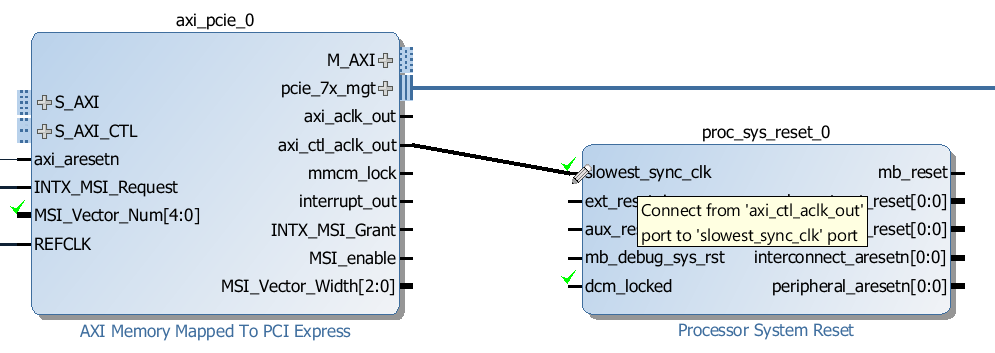

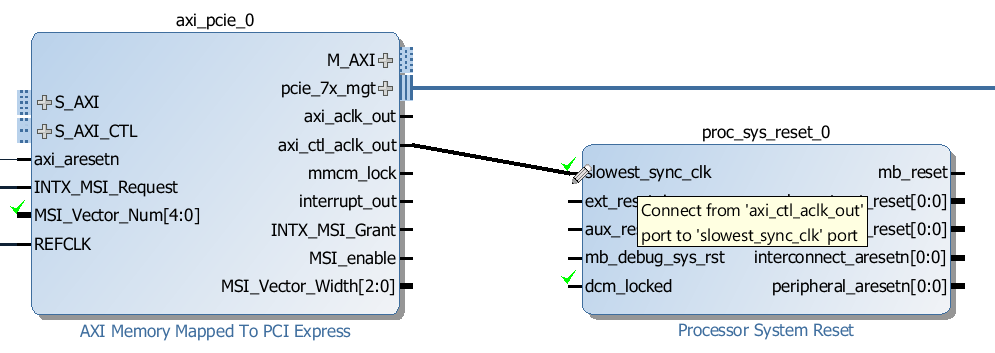

Our design will be using the two clocks supplied by the AXI-PCIe bridge: axi_aclk_out and axi_ctl_aclk_out. We will need to add a Processor System Reset to generate resets for each of those clocks.

-

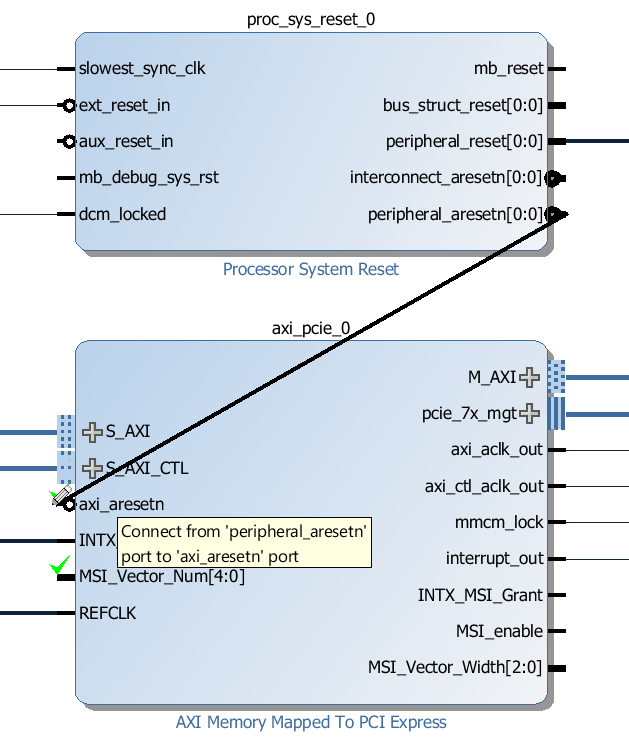

From the IP Catalog, add a “Processor System Reset” to the design - this one should automatically be called proc_sys_reset_0.

-

Connect the axi_ctl_aclk_out output of the AXI-PCIe block to the slowest_sync_clk input of the proc_sys_reset_0 Processor System Reset.

-

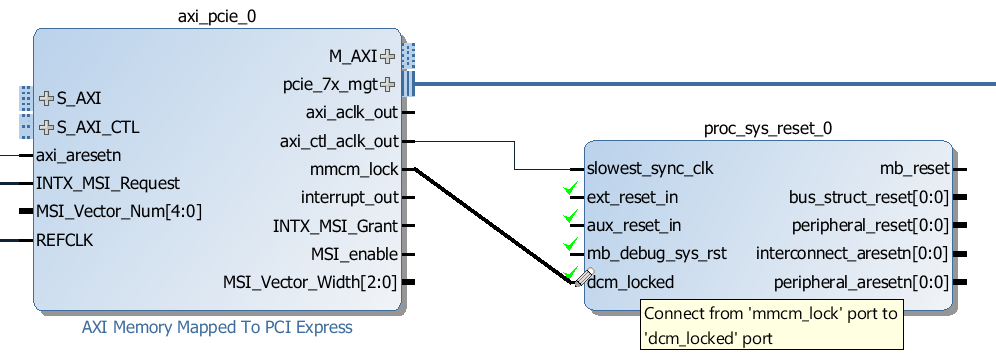

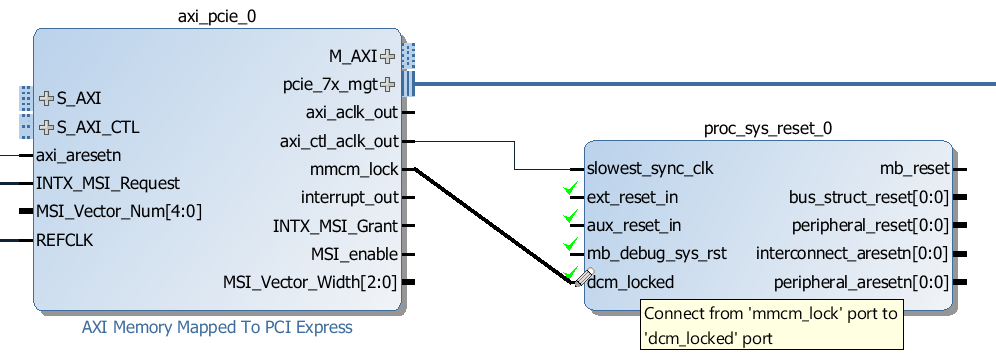

Connect the mmcm_lock output of the AXI-PCIe block to the dcm_locked input of the proc_sys_reset_0 Processor System Reset.

-

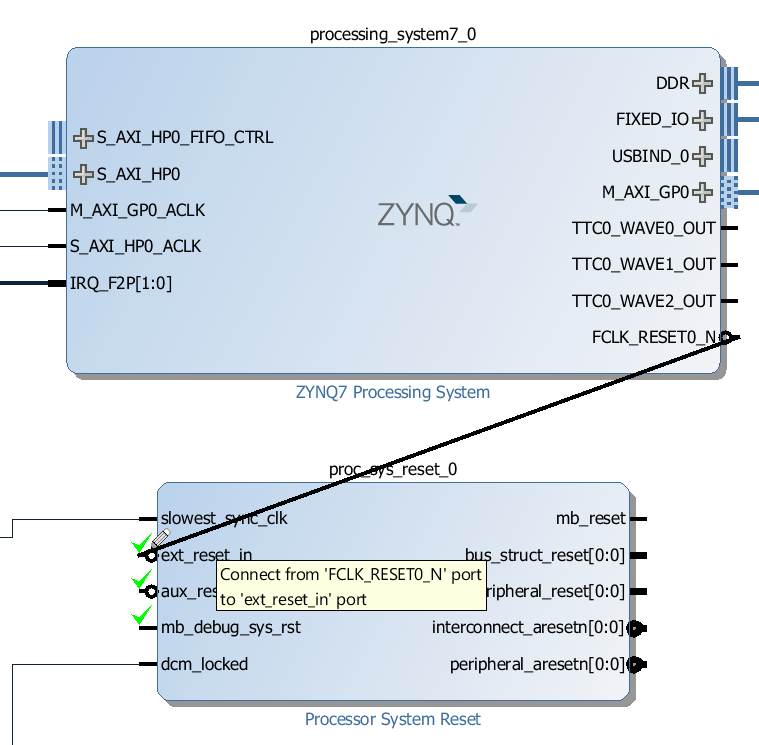

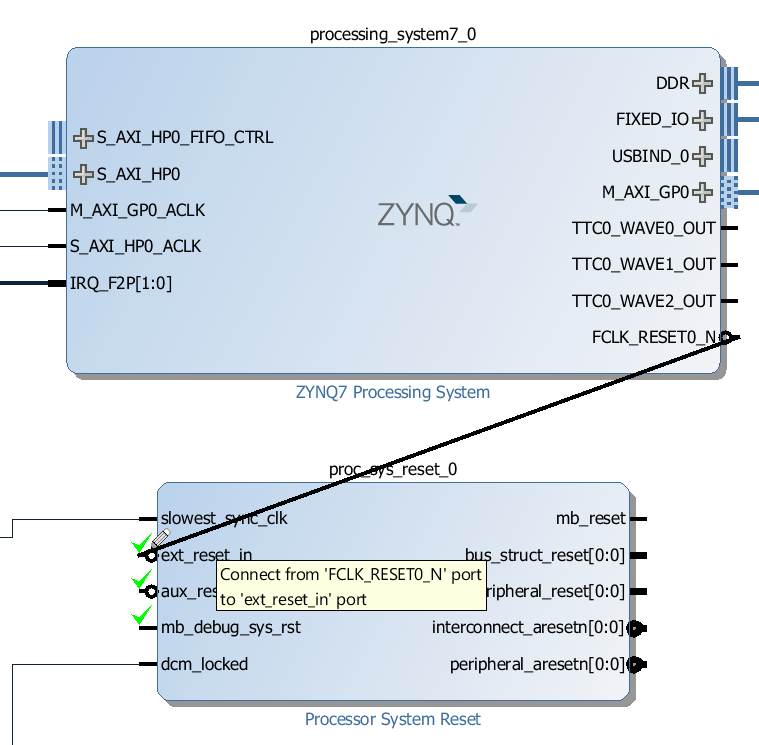

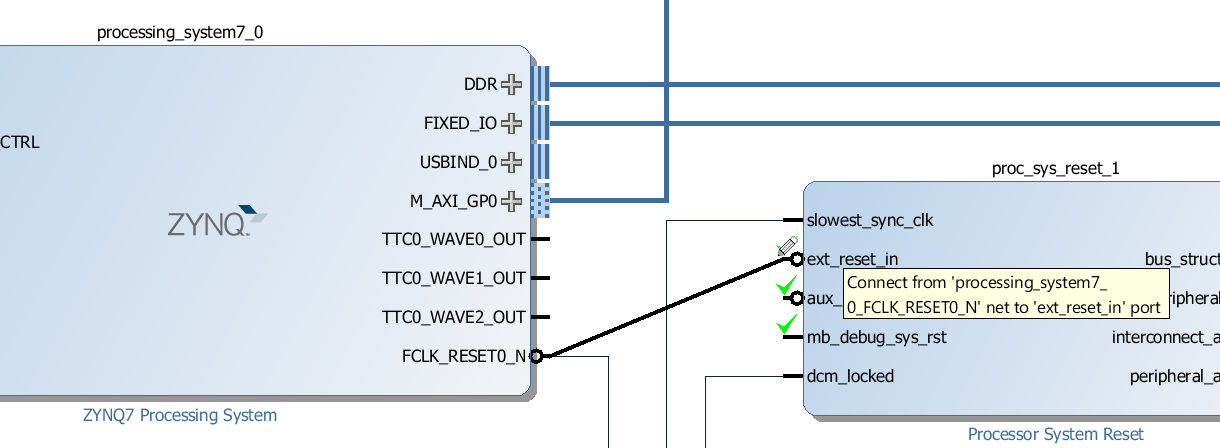

Connect the FCLK_RESET0_N output of the Zynq PS to the ext_reset_in input of the proc_sys_reset_0 Processor System Reset.

-

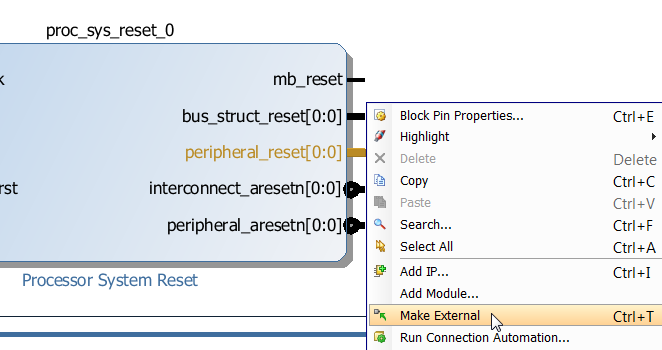

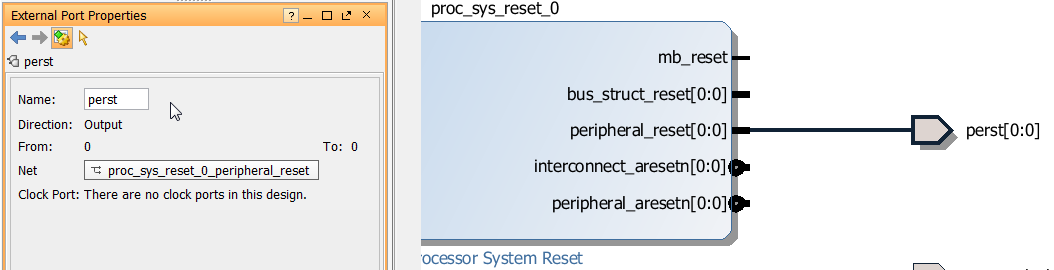

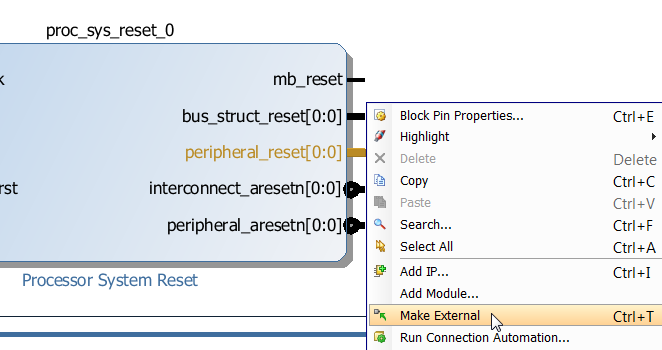

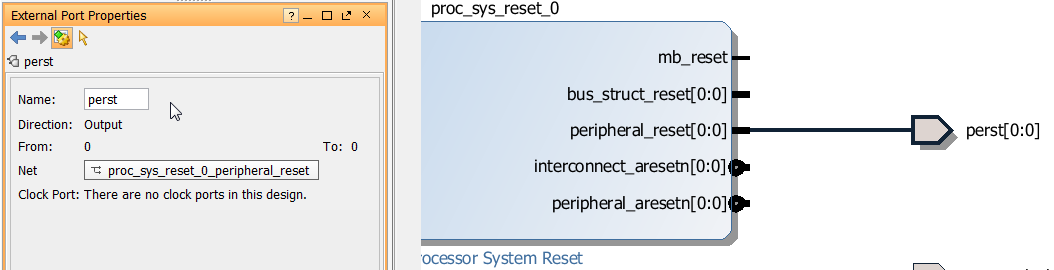

Right click on the peripheral_reset output of the proc_sys_reset_0 Processor System Reset and click “Make External”. This will be the signal that drives the PCIe reset (PERST) input of the SSD.

-

Click on the port we just created and rename it to “perst”.

-

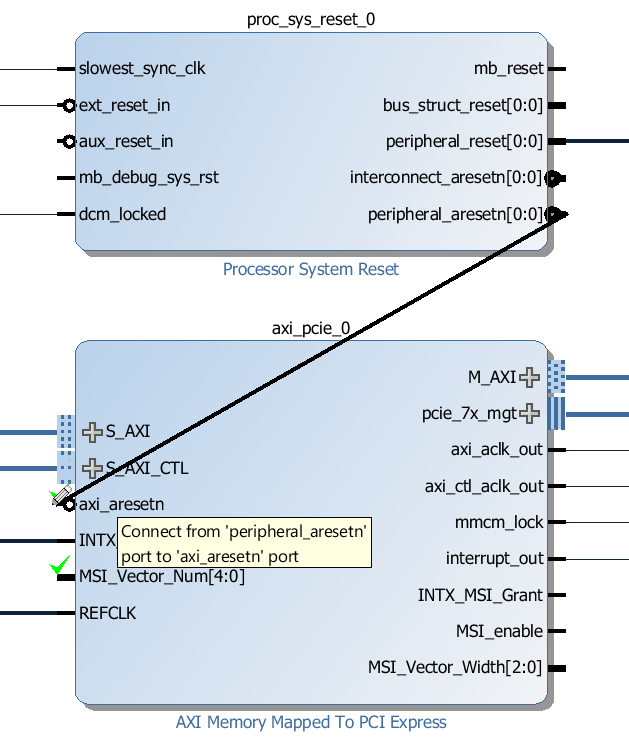

Connect the peripheral_aresetn output of proc_sys_reset_0 to the axi_aresetn input of the axi_pcie_0 block.

-

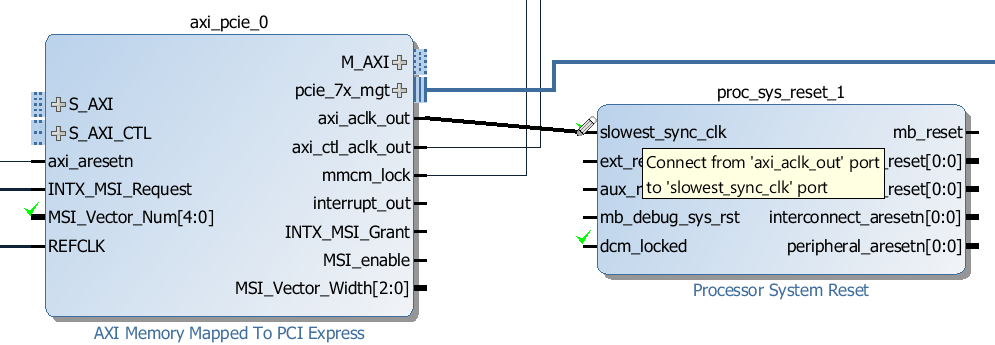

From the IP Catalog, add another “Processor System Reset” to the design - this one should automatically be called proc_sys_reset_1.

-

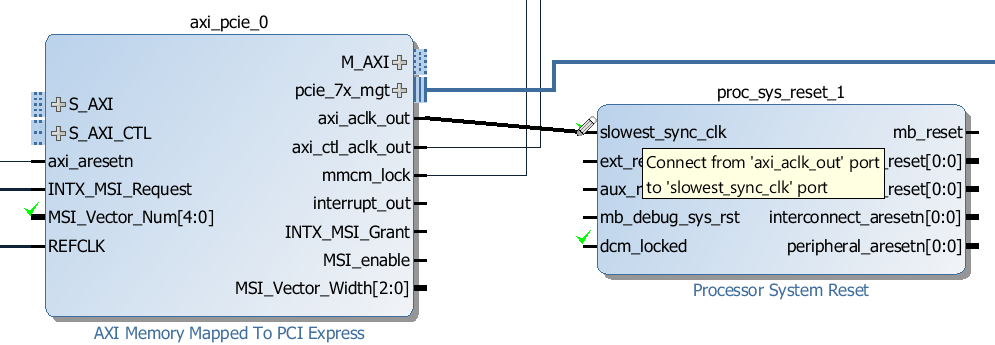

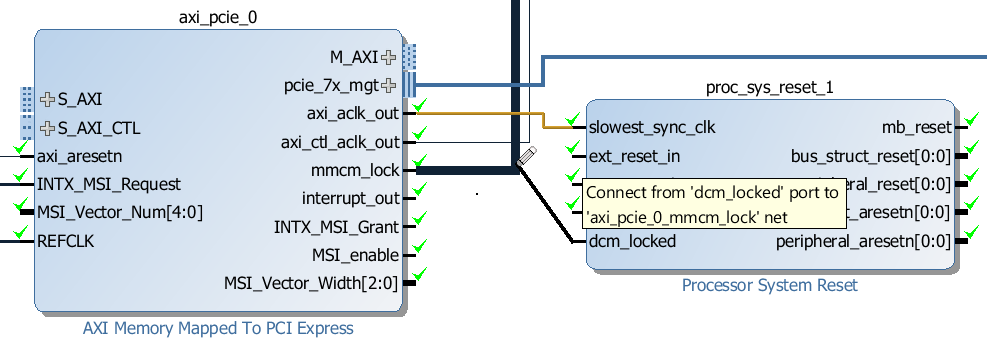

Connect the axi_aclk_out output of the AXI-PCIe block to the slowest_sync_clk input of the proc_sys_reset_1 Processor System Reset.

-

Connect the mmcm_lock output of the AXI-PCIe block to the dcm_locked input of the proc_sys_reset_1 Processor System Reset.

-

Connect the FCLK_RESET0_N output of the Zynq PS to the ext_reset_in input of the proc_sys_reset_1 Processor System Reset.

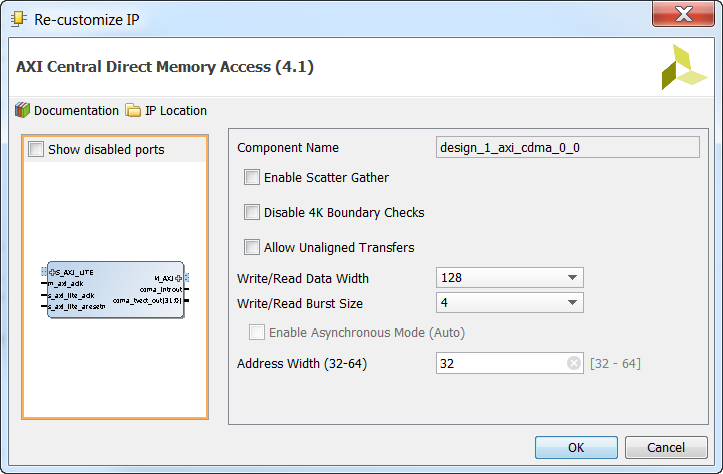

Add the CDMA

We’re going to add a Central DMA (CDMA) to this design to allow us to make DMA transfers between the PCIe end-point and the DDR3 memory. We won’t actually test it (that will be the subject of another tutorial), but most PCIe designs can benefit from a Central DMA because it allows higher throughput over the PCIe link by using burst transfers.
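Why do burst transfers matter so much? Every transaction on the link travels in a transaction layer packet (TLP) with a roughly fixed per-packet overhead, so a single 4-byte access wastes most of the link, while a burst can fill a TLP up to the negotiated maximum payload size. The sketch below estimates the efficiency for 8b/10b (Gen1/Gen2) links, assuming a 3-DW header (32-bit addressing), no ECRC, and ignoring DLLP traffic such as ACKs and flow-control updates. It is an approximation, not a measurement of this design.

```python
# Estimate PCIe Gen1/Gen2 link efficiency for TLPs with various payload sizes.
# Per-TLP overhead on the wire for 8b/10b generations:
#   STP framing (1) + sequence number (2) + header (12 for 3DW, 16 for 4DW)
#   + LCRC (4) + END framing (1). The optional ECRC (4) is assumed absent.

FRAMING = 1 + 1   # STP + END symbols
SEQUENCE = 2
LCRC = 4


def tlp_efficiency(payload_bytes: int, header_bytes: int = 12) -> float:
    """Fraction of link bytes that carry payload for a single TLP."""
    overhead = FRAMING + SEQUENCE + header_bytes + LCRC
    return payload_bytes / (payload_bytes + overhead)


if __name__ == "__main__":
    for payload in (4, 32, 128, 256):
        print(f"{payload:4d}-byte payload: {tlp_efficiency(payload):.1%}")
```

With 20 bytes of overhead per TLP, a 4-byte payload uses only 4/24 of the link bytes, while a 128-byte burst reaches 128/148.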

-

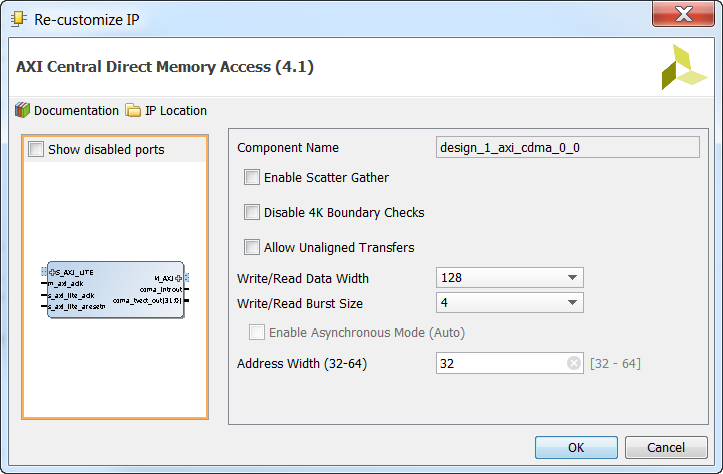

Add an “AXI Central Direct Memory Access” from the IP Catalog to the block design.

-

Double click on the CDMA block to open the configuration window. Disable Scatter Gather and set “Write/Read Data Width” to 128 as shown in the image below.

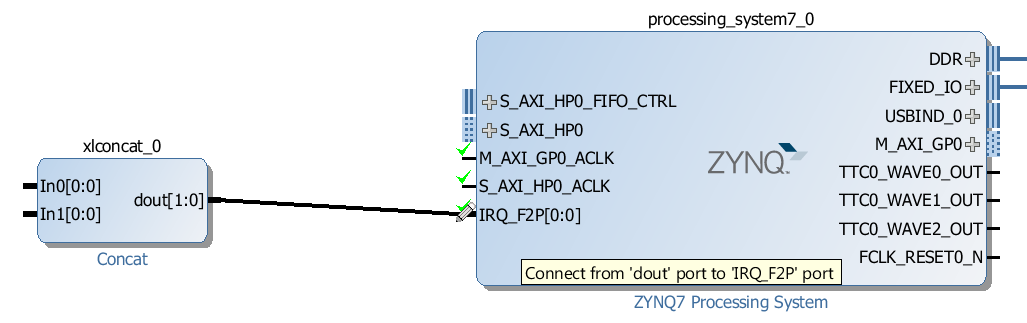

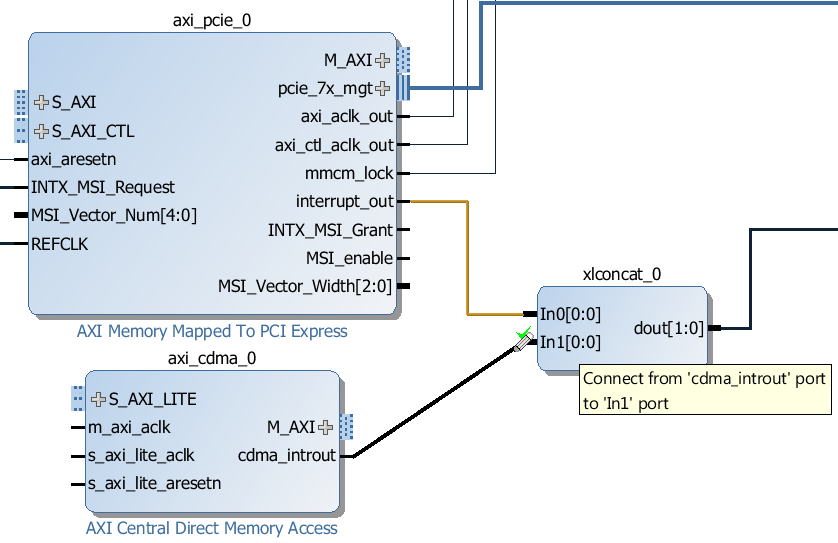

Add the Interrupt Concat

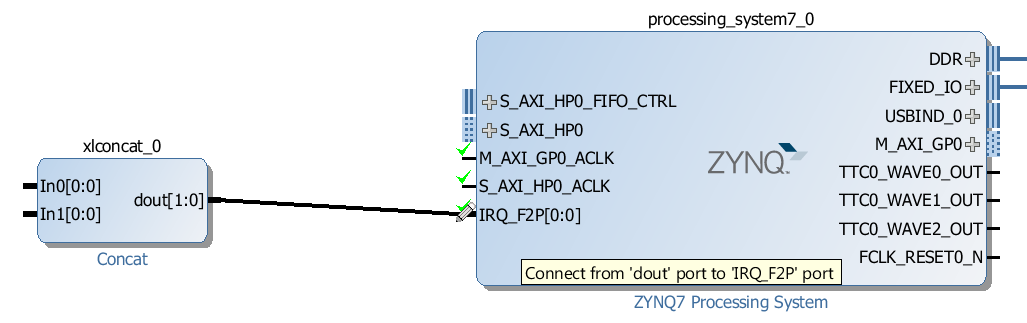

To connect interrupts to the IRQ_F2P port of the Zynq PS, we need to use a Concat.

-

From the IP Catalog, add a “Concat” to the block design.

-

By default, it should have two inputs - that’s perfect for us, as we only have 2 interrupts to connect. Connect the output of the Concat to the

IRQ_F2P port of the Zynq PS.

-

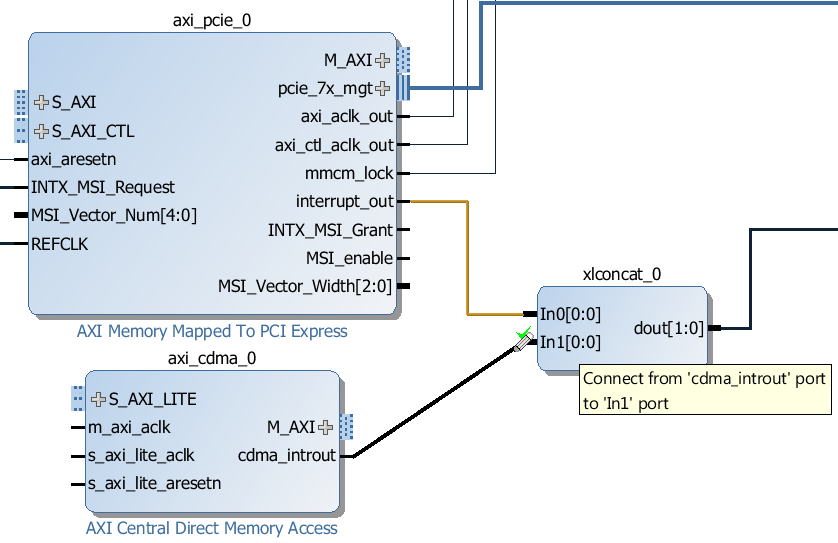

Connect the

interrupt_out output of the AXI-PCIe block to the “In0” input of the Concat.

-

Connect the

cdma_introut output of the CDMA block to the “In1” input of the Concat.
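The Concat places In0 on bit 0 of IRQ_F2P, In1 on bit 1, and so on. On the Zynq-7000, IRQ_F2P[7:0] map to GIC interrupt IDs 61 to 68 and IRQ_F2P[15:8] map to IDs 84 to 91, which is useful to know when writing interrupt handlers or reading a device tree. The sketch below encodes that mapping. The CDMA instance name axi_cdma_0 is an assumption (Vivado's usual default), so adjust it to match your design.

```python
# Map a bit of the Zynq-7000 IRQ_F2P[15:0] bus to its GIC interrupt ID.


def irq_f2p_to_gic_id(bit: int) -> int:
    if 0 <= bit <= 7:
        return 61 + bit            # IRQ_F2P[7:0]  -> IDs 61..68
    if 8 <= bit <= 15:
        return 84 + (bit - 8)      # IRQ_F2P[15:8] -> IDs 84..91
    raise ValueError("IRQ_F2P is a 16-bit bus (bits 0..15)")


# Concat input index == IRQ_F2P bit index. axi_cdma_0 is an assumed instance name.
CONCAT_INPUTS = {
    0: "axi_pcie_0/interrupt_out",
    1: "axi_cdma_0/cdma_introut",
}

if __name__ == "__main__":
    for bit, source in CONCAT_INPUTS.items():
        print(f"In{bit} ({source}) -> IRQ_F2P[{bit}] -> GIC ID {irq_f2p_to_gic_id(bit)}")
```

In a Linux device tree, shared peripheral interrupts are numbered from the GIC ID minus 32, so ID 61 would appear there as 29.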

Add the AXI Interconnects

Now the last thing to do is add the AXI Interconnects and wire up all the AXI interfaces.

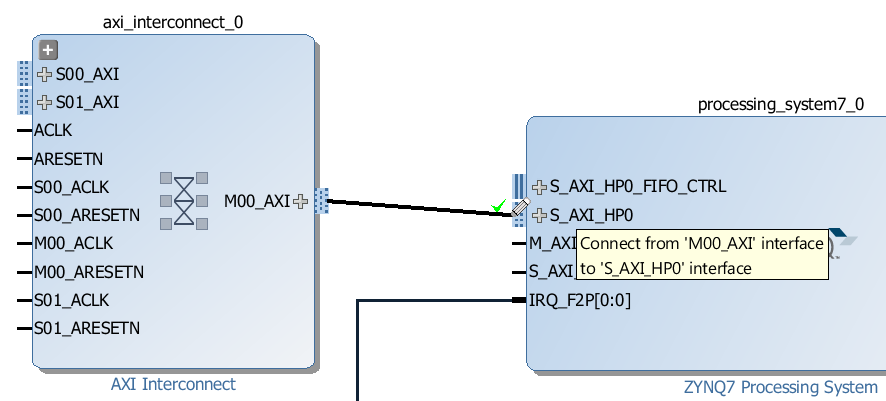

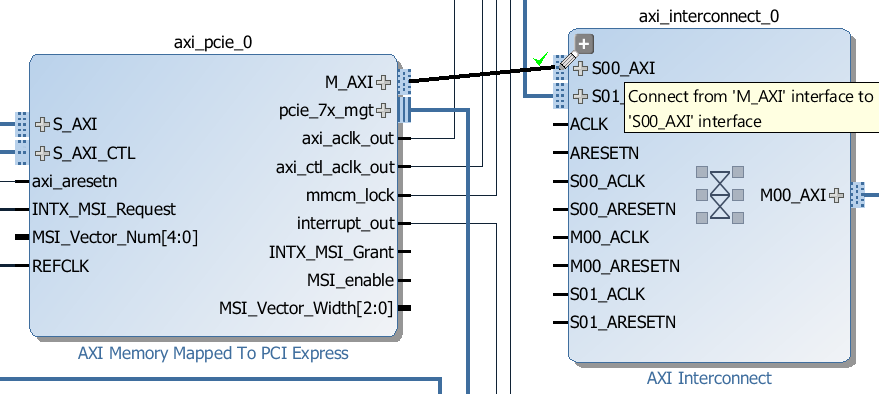

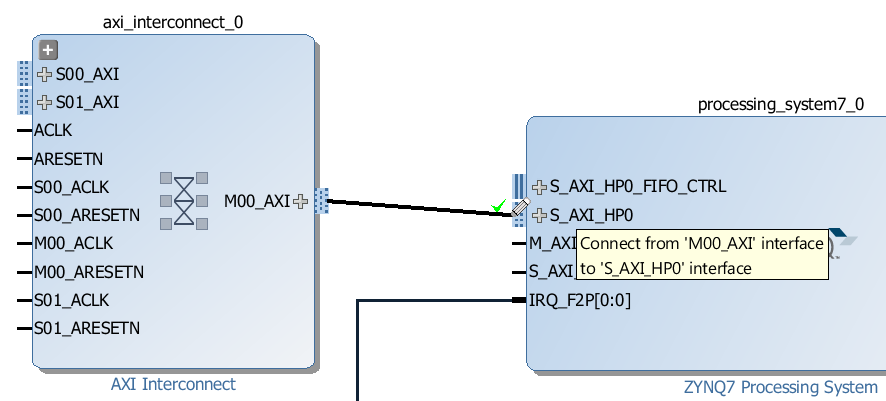

axi_interconnect_0:

-

From the IP Catalog, add an “AXI Interconnect” to the block design - this one should be automatically named

axi_interconnect_0. We’ll use this to create two ports for accessing the DDR3 memory.

-

Re-configure it to have 2 slave ports and 1 master port.

-

Connect the

M00_AXI port of the axi_interconnect_0 to the S_AXI_HP0 port of the Zynq PS.

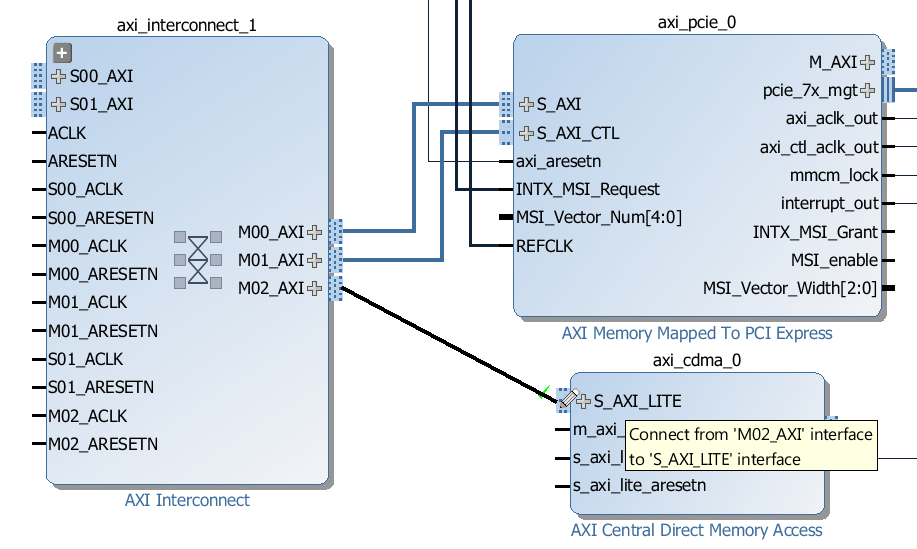

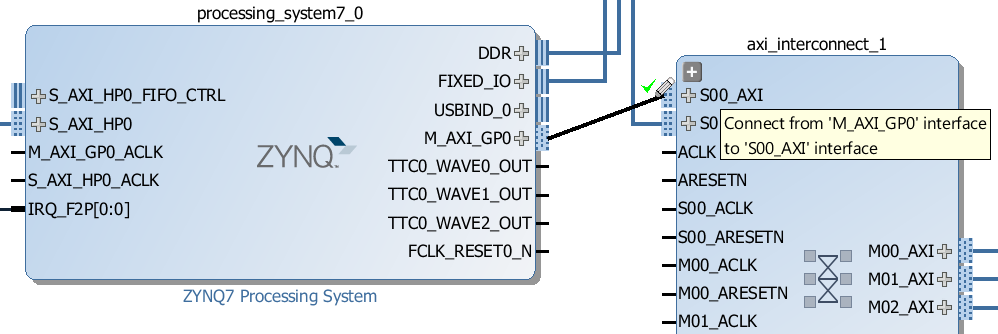

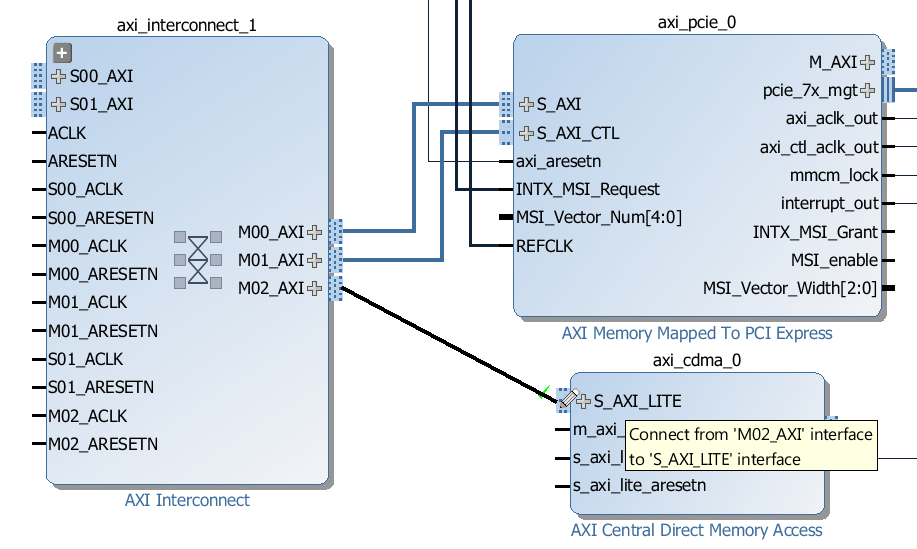

axi_interconnect_1:

-

From the IP Catalog, add another “AXI Interconnect” to the block design - this one should be automatically named

axi_interconnect_1. We’ll use this to create two ports for accessing the AXI-PCIe control interface, the PCIe end-point and the CDMA control interface.

-

Re-configure it to have 2 slave ports and 3 master ports.

-

Connect the

M00_AXI port of the axi_interconnect_1 to the S_AXI port of the AXI-PCIe block.

-

Connect the

M01_AXI port of the axi_interconnect_1 to the S_AXI_CTL port of the AXI-PCIe block.

-

Connect the

M02_AXI port of the axi_interconnect_1 to the S_AXI_LITE port of the CDMA block.

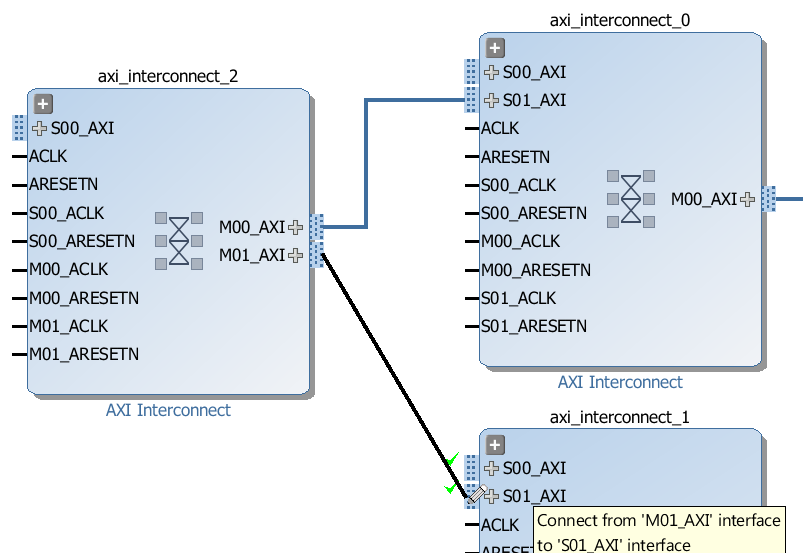

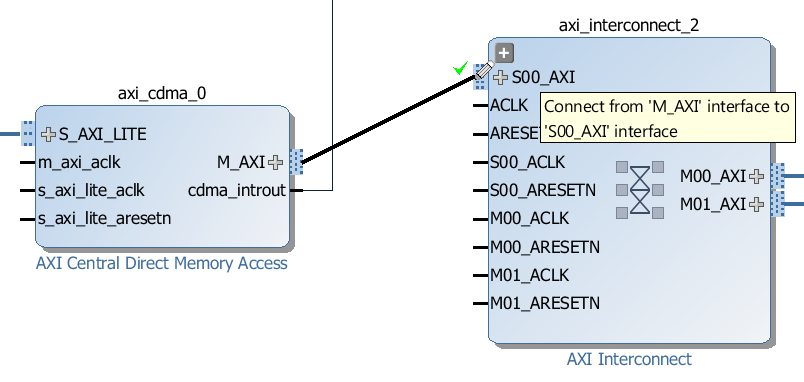

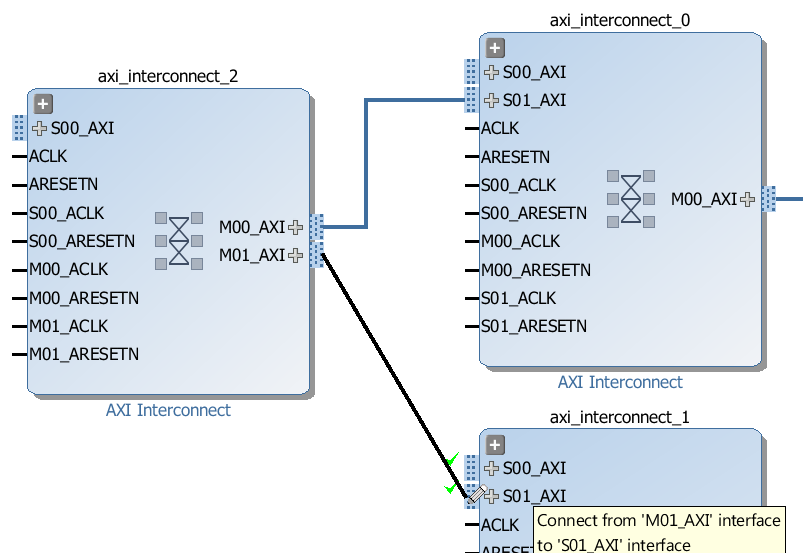

axi_interconnect_2:

-

From the IP Catalog, add another “AXI Interconnect” to the block design - this one should be automatically named

axi_interconnect_2. We’ll use this to allow the CDMA to access both the DDR3 memory and the PCIe end-point.

-

By default, it should already have 1 slave port and 2 master ports, which is exactly what we need.

-

Connect the

M00_AXI port of the axi_interconnect_2 to the S01_AXI port of the axi_interconnect_0 (the first interconnect we created).

-

Connect the

M01_AXI port of the axi_interconnect_2 to the S01_AXI port of the axi_interconnect_1 (the second interconnect we created).

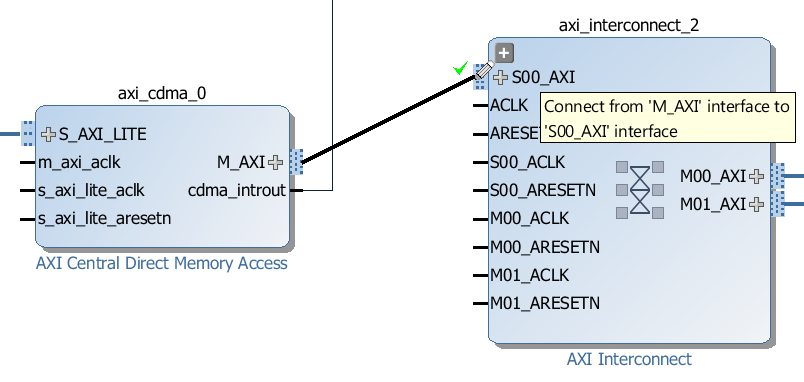

Now for the rest of the connections:

-

Connect the

M_AXI port of the CDMA block to the S00_AXI port of the axi_interconnect_2.

-

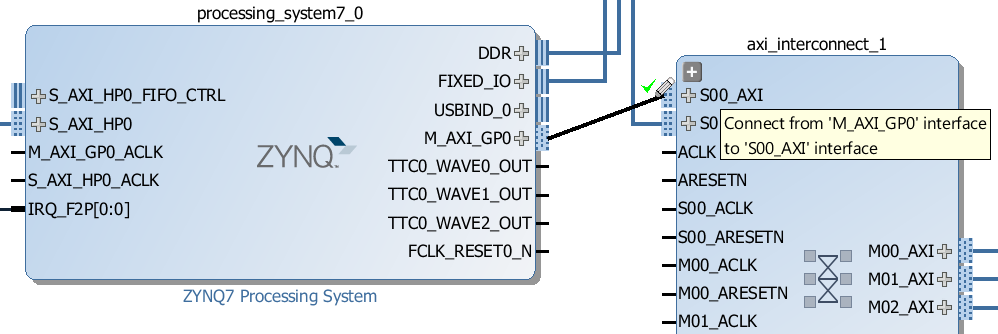

Connect the

M_AXI_GP0 port of the Zynq PS to the S00_AXI port of the axi_interconnect_1.

-

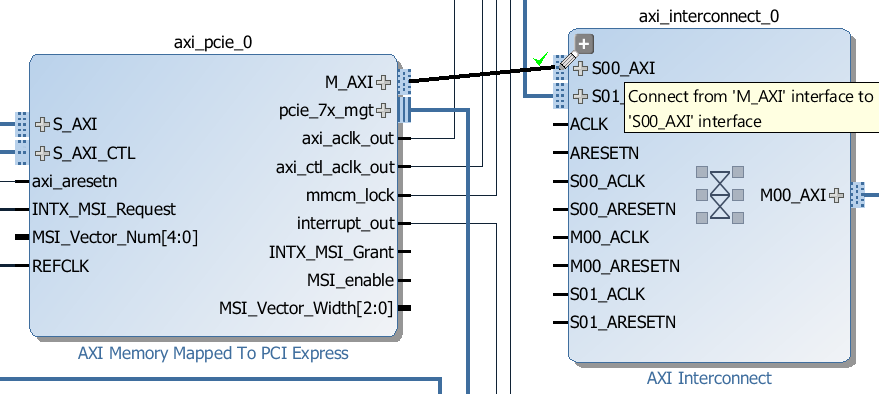

Connect the

M_AXI port of the AXI-PCIe block to the S00_AXI port of the axi_interconnect_0.
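Before moving on, it can help to double-check this topology against the bus-master list in the Design Overview. The sketch below records the AXI connections made above as a small directed graph and walks it from each master. It is a bookkeeping aid under a few assumptions: the CDMA instance name axi_cdma_0 is assumed, and the Zynq PS's own path to DDR3 goes through its internal interconnect, which is not modelled here, so the PS only shows its PL-side targets.

```python
# Model the AXI connections as a directed graph and find, for each bus master,
# which slave interfaces it can reach.
from collections import deque

# Master interface or interconnect -> what it forwards to.
# Nodes with no outgoing edges are treated as slave interfaces.
EDGES = {
    "zynq/M_AXI_GP0": ["axi_interconnect_1"],
    "axi_pcie_0/M_AXI": ["axi_interconnect_0"],
    "axi_cdma_0/M_AXI": ["axi_interconnect_2"],   # assumed instance name
    "axi_interconnect_0": ["zynq/S_AXI_HP0 (DDR3)"],
    "axi_interconnect_1": ["axi_pcie_0/S_AXI (PCIe)", "axi_pcie_0/S_AXI_CTL",
                           "axi_cdma_0/S_AXI_LITE"],
    "axi_interconnect_2": ["axi_interconnect_0", "axi_interconnect_1"],
}

MASTERS = ["zynq/M_AXI_GP0", "axi_pcie_0/M_AXI", "axi_cdma_0/M_AXI"]


def reachable_slaves(master):
    """Breadth-first search from a master to every slave interface it can reach."""
    seen, slaves = set(), set()
    queue = deque(EDGES[master])
    while queue:
        node = queue.popleft()
        if node in seen:
            continue
        seen.add(node)
        if node in EDGES:
            queue.extend(EDGES[node])   # an interconnect: keep following
        else:
            slaves.add(node)            # a leaf: a slave interface
    return slaves


if __name__ == "__main__":
    for m in MASTERS:
        print(m, "->", sorted(reachable_slaves(m)))
```

The PCIe bridge's M_AXI reaches only the DDR3 port, while the CDMA reaches both DDR3 and the PCIe address space, matching the bus-master list.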

Connect all the clocks

Let’s start by hooking up the main clock, axi_aclk_out:

- Connect the axi_aclk_out clock to the M_AXI_GP0_ACLK and S_AXI_HP0_ACLK inputs of the Zynq PS.
- Connect the axi_aclk_out clock to the m_axi_aclk and s_axi_lite_aclk inputs of the CDMA.
- Connect the axi_aclk_out clock to the “ACLK”, S00_ACLK, M00_ACLK and S01_ACLK inputs of axi_interconnect_0 (i.e. all of its clock inputs).
- Connect the axi_aclk_out clock to the “ACLK”, S00_ACLK, M00_ACLK, S01_ACLK and M02_ACLK inputs of axi_interconnect_1 (notice that we do not connect M01_ACLK yet!).
- Connect the axi_aclk_out clock to the “ACLK”, S00_ACLK, M00_ACLK and M01_ACLK inputs of axi_interconnect_2 (i.e. all of its clock inputs).

Now the control clock, axi_ctl_aclk_out:

- Connect the axi_ctl_aclk_out clock to the M01_ACLK input of axi_interconnect_1.
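Only one interconnect port ends up in a different clock domain: M01 of axi_interconnect_1, which talks to the bridge's control interface on axi_ctl_aclk_out. As we understand the IP, the AXI Interconnect handles this by inserting clock-domain-crossing logic on that port, so nothing extra needs to be added by hand. The sketch below is a hypothetical bookkeeping aid, not a Vivado feature: it records the clock connections from the list above and flags any port whose clock differs from the interconnect's core ACLK.

```python
# Clock source connected to each port of each AXI Interconnect, as wired above.
A, CTL = "axi_aclk_out", "axi_ctl_aclk_out"

INTERCONNECT_CLOCKS = {
    "axi_interconnect_0": {"ACLK": A, "S00": A, "S01": A, "M00": A},
    "axi_interconnect_1": {"ACLK": A, "S00": A, "S01": A,
                           "M00": A, "M01": CTL, "M02": A},
    "axi_interconnect_2": {"ACLK": A, "S00": A, "M00": A, "M01": A},
}


def crossing_ports(clocks):
    """Return the ports whose clock differs from the interconnect's core ACLK."""
    core = clocks["ACLK"]
    return sorted(p for p, clk in clocks.items() if p != "ACLK" and clk != core)


if __name__ == "__main__":
    for name, clocks in INTERCONNECT_CLOCKS.items():
        print(name, crossing_ports(clocks) or "single clock domain")
```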

Connect all the resets

- Connect the interconnect_aresetn output of the proc_sys_reset_1 Processor System Reset to the “ARESETN” input of all three AXI Interconnects.
- Connect the peripheral_aresetn output of the proc_sys_reset_1 Processor System Reset to the following inputs:
  - CDMA input s_axi_lite_aresetn
  - “axi_interconnect_0” inputs S00_ARESETN, M00_ARESETN and S01_ARESETN
  - “axi_interconnect_1” inputs S00_ARESETN, M00_ARESETN, S01_ARESETN and M02_ARESETN (notice that we do not connect M01_ARESETN yet!)
  - “axi_interconnect_2” inputs S00_ARESETN, M00_ARESETN and M01_ARESETN
- Connect the peripheral_aresetn output of the proc_sys_reset_0 Processor System Reset to the M01_ARESETN input of axi_interconnect_1.

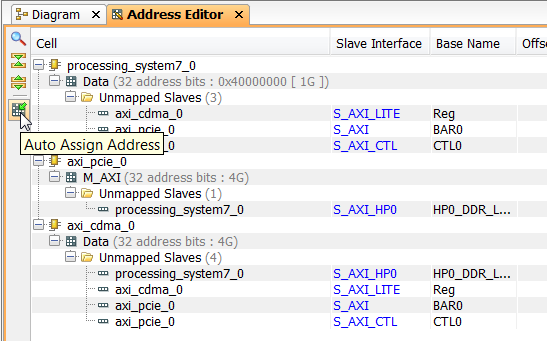

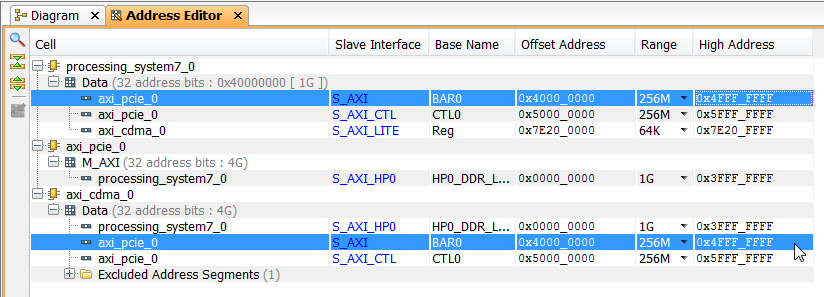

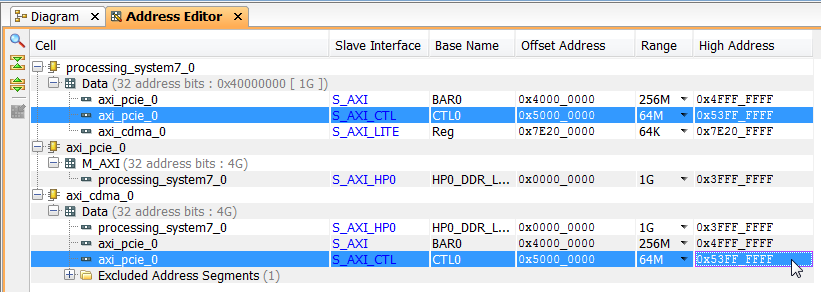

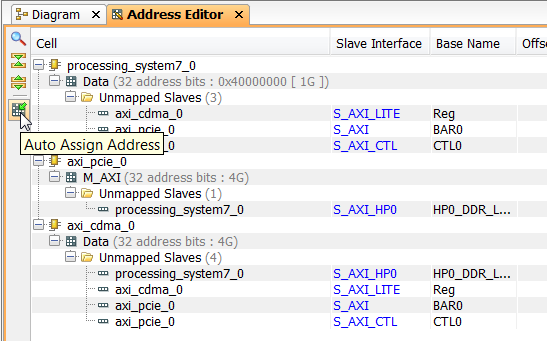

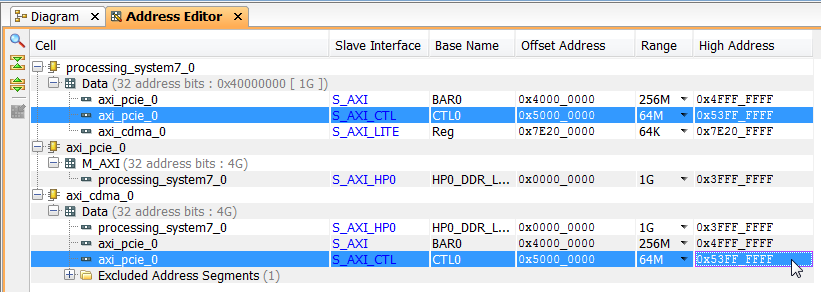
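The pattern behind these reset connections is that each reset input comes from the Processor System Reset whose slowest_sync_clk is the same clock that drives that interface, which is why M01_ARESETN of axi_interconnect_1 is the one interconnect reset taken from proc_sys_reset_0. The sketch below checks that pattern over the connections listed above. It is a hypothetical bookkeeping aid, not a Vivado feature, and the CDMA instance name axi_cdma_0 is assumed.

```python
# Check that every reset input is driven by the Processor System Reset that is
# synchronized to the same clock as the interface it resets.

# Clock each reset generator is synchronized to (its slowest_sync_clk input).
RESET_GEN_CLOCK = {
    "proc_sys_reset_0": "axi_ctl_aclk_out",
    "proc_sys_reset_1": "axi_aclk_out",
}

# (reset input, clock of that interface, reset generator driving it), as wired above.
A = "axi_aclk_out"
RESETS = [("axi_cdma_0/s_axi_lite_aresetn", A, "proc_sys_reset_1")]  # assumed name
RESETS += [(f"{ic}/ARESETN", A, "proc_sys_reset_1")
           for ic in ("axi_interconnect_0", "axi_interconnect_1", "axi_interconnect_2")]
RESETS += [(f"axi_interconnect_0/{p}_ARESETN", A, "proc_sys_reset_1")
           for p in ("S00", "M00", "S01")]
RESETS += [(f"axi_interconnect_1/{p}_ARESETN", A, "proc_sys_reset_1")
           for p in ("S00", "M00", "S01", "M02")]
RESETS += [(f"axi_interconnect_2/{p}_ARESETN", A, "proc_sys_reset_1")
           for p in ("S00", "M00", "M01")]
RESETS.append(("axi_interconnect_1/M01_ARESETN", "axi_ctl_aclk_out", "proc_sys_reset_0"))


def mismatched_resets(resets):
    """Return reset inputs driven by a generator synchronized to a different clock."""
    return [name for name, clk, gen in resets if RESET_GEN_CLOCK[gen] != clk]


if __name__ == "__main__":
    bad = mismatched_resets(RESETS)
    print("all resets match their clock domains" if not bad else f"check: {bad}")
```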

Assign addresses

-

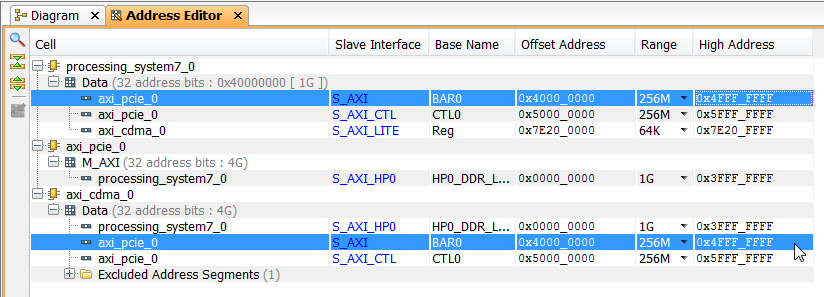

Open the “Address Editor” tab and click the “Auto Assign Address” button.

-

There will be an error generated because Vivado will try to assign 1G to the PCIe BAR0 and 256M to the PCIe control interface (CTL0). Change the size of PCIe BAR0 to 256M and use the “Auto Assign Address” button again. It should succeed this time and you will have the addresses shown below.

-

Finally, we’ll need to set the size of the PCIe control interface to 64M, to avoid a memory allocation problem in PetaLinux later.

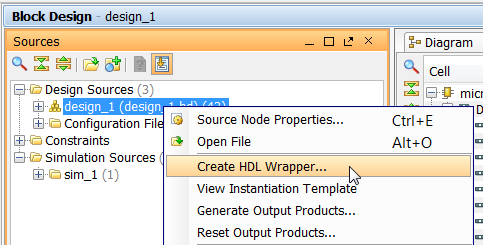

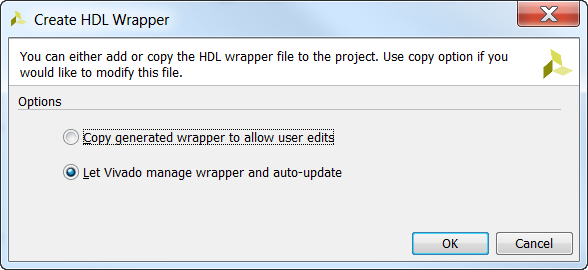

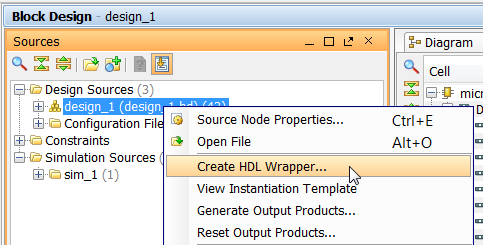

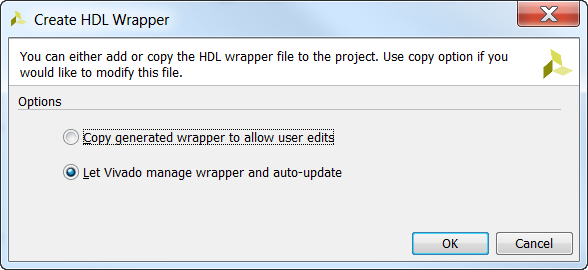
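As far as we can tell, the first failure is a capacity problem: on the Zynq-7000, the M_AXI_GP0 port exposes a 1 GB window (0x4000_0000 to 0x7FFF_FFFF), and a 1G BAR0 aligned to its own size fills that window completely, leaving no room for CTL0. The toy allocator below illustrates this under simplifying assumptions. It is not Vivado's algorithm, the 64K size for the CDMA control interface is an assumption, and the addresses it picks will not necessarily match the ones Vivado assigns.

```python
# Toy first-fit allocator: place power-of-two segments, largest first, at the
# lowest free address that is aligned to the segment's own size.

K, M, G = 1 << 10, 1 << 20, 1 << 30

GP0_BASE = 0x4000_0000   # Zynq-7000 M_AXI_GP0 window starts here...
GP0_SIZE = 1 * G         # ...and spans 1 GB (up to 0x7FFF_FFFF)


def assign(segments, base=GP0_BASE, size=GP0_SIZE):
    """Return {name: address}, or raise ValueError if a segment cannot fit."""
    used = []                                # (start, end) ranges taken so far
    addresses = {}
    for name, seg in sorted(segments.items(), key=lambda kv: -kv[1]):
        addr = base                          # base is aligned to every size <= 1 GB
        while addr + seg <= base + size:
            if all(addr + seg <= start or addr >= end for start, end in used):
                used.append((addr, addr + seg))
                addresses[name] = addr
                break
            addr += seg                      # next size-aligned candidate
        else:
            raise ValueError(f"{name} (0x{seg:X} bytes) does not fit in the window")
    return addresses


if __name__ == "__main__":
    try:
        assign({"BAR0": 1 * G, "CTL0": 256 * M, "CDMA": 64 * K})
    except ValueError as err:
        print("first attempt:", err)
    final = assign({"BAR0": 256 * M, "CTL0": 64 * M, "CDMA": 64 * K})
    print({name: hex(addr) for name, addr in final.items()})
```

Shrinking BAR0 to 256M frees three quarters of the window, which is why the second “Auto Assign Address” attempt succeeds.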

Create the HDL wrapper

Now the block diagram is complete, so we can save it and create an HDL wrapper for it.

- Open the “Sources” tab from the Block Design window.
- Right-click on “design_1” and select “Create HDL wrapper” from the drop-down menu.
- From the “Create HDL wrapper” window, select “Let Vivado manage wrapper and auto-update”. Click “OK”.

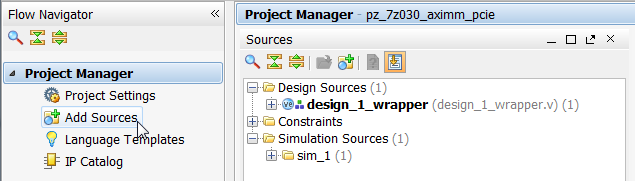

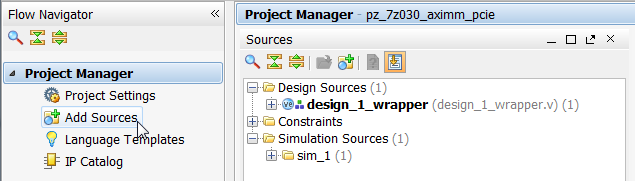

Add the constraints

We must now add our constraints to the design for assignment of the PCIe integrated block, the gigabit transceivers, the reference clocks and a few other signals.

-

Download the constraints file from this link: Constraints for Zynq PCIe Root Complex design

-

Save the constraints file somewhere on your hard disk.

-

From the Project Manager, click “Add Sources”.

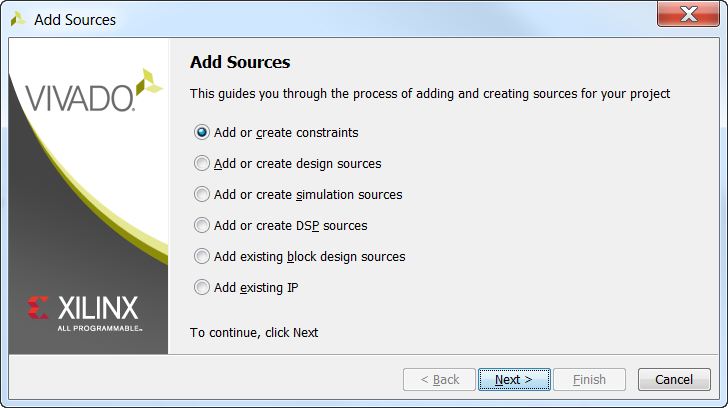

-

Then click “Add or create constraints”.

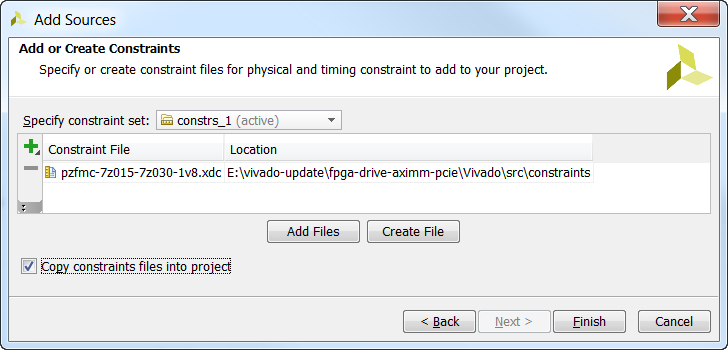

-

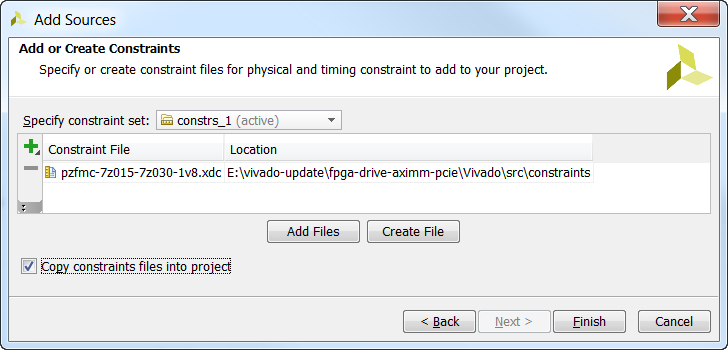

Then click “Add files” and browse to the constraints file that you downloaded earlier. Select the constraints file, then click “OK”. Now tick “Copy constraints files into project” and click “Finish”.

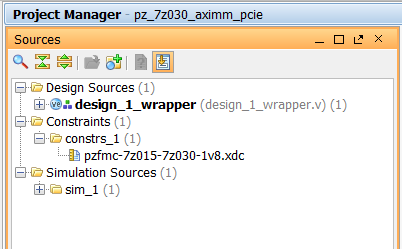

-

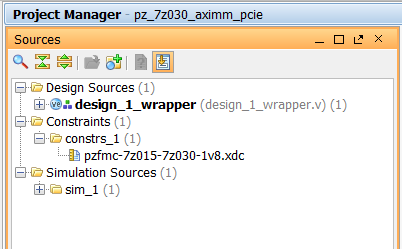

You should now see the constraints file in the Sources window.

You’re all done!

Testing the project on hardware

In the next and final part of this tutorial series, we will test our design on hardware by connecting an NVMe PCIe SSD to our FPGA. We’ll start by running a simple stand-alone application that will check the PCIe bus status and enumerate the end-points. Then we’ll generate a PetaLinux build that is customized to our hardware and we’ll bring up the SSD from the command line.

Sources Git repository

The sources for re-generating this project automatically can be found on Github here: FPGA Drive PCIe Root Complex design

Other useful resources

Here are some other useful resources for creating PCI Express designs:

If you have any questions about this tutorial, or if you run into problems, please leave me a comment below.